Juno-Ubuntu1404 Testbed

Fully integrated Resource Provider INFN-PADOVA-STACK in production since 28 July 2015.

EGI Monitoring

Local monitoring

Local dashboard

Layout

- Controller + Network node: egi-cloud.pd.infn.it

- Compute nodes: cloud-01:06.pn.pd.infn.it

- Network layout available here (authorized users only)

GlusterFS Configuration (To be updated)

- we assume that partitions are created by preseed, so in the Compute nodes do:

# on cloud-01:06:
echo -e "n\np\n1\n\n\nw" | fdisk /dev/sdb
mkfs.xfs -f /dev/sdb1
mkdir -p /var/lib/nova/instances
echo "/dev/sdb1 /var/lib/nova/instances xfs defaults 0 2" >> /etc/fstab
mount -a
# then it should be:
df -h
Filesystem      Size  Used Avail Use% Mounted on
/dev/sda6        30G  8.5G   20G  31% /
none            4.0K     0  4.0K   0% /sys/fs/cgroup
udev             24G  4.0K   24G   1% /dev
tmpfs           4.8G  2.8M  4.8G   1% /run
none            5.0M     0  5.0M   0% /run/lock
none             24G     0   24G   0% /run/shm
none            100M     0  100M   0% /run/user
/dev/sda7       600G  8.3G  592G   2% /export/glance
/dev/sda8       1.3T   45M  1.3T   1% /export/cinder
/dev/sdb1       1.9T   29G  1.8T   2% /var/lib/nova/instances
/dev/sda1        95M   86M  1.7M  99% /boot
#
mkdir /export/glance/brick /export/cinder/brick
mkdir -p /var/lib/glance/images /var/lib/cinder
#
apt-get -y install glusterfs-server
#
# now on cloud-01 only (see [[https://linuxsysadm.wordpress.com/2013/05/16/glusterfs-remove-extended-attributes-to-completely-remove-bricks/| here]] in case of issues):
for i in `seq 11 16`; do gluster peer probe 192.168.115.$i; done
for i in cinder; do gluster volume create ${i}volume replica 2 transport tcp 192.168.115.11:/export/$i/brick 192.168.115.12:/export/$i/brick 192.168.115.13:/export/$i/brick 192.168.115.14:/export/$i/brick 192.168.115.15:/export/$i/brick 192.168.115.16:/export/$i/brick; gluster volume start ${i}volume; done
gluster volume info
cat <<EOF >> /etc/fstab
192.168.115.11:/cindervolume /var/lib/cinder glusterfs defaults 1 1
EOF
mount -a
# the same on the other cloud-* nodes, using 192.168.115.12-16 in /etc/fstab, and:
for i in `seq 11 16`; do gluster peer probe 192.168.115.$i; done
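
The six-brick command line above can also be generated from the host range instead of being typed out. The following is a minimal dry-run sketch: `brick_list` and `volume_create_cmd` are hypothetical helper names, while the 192.168.115.11-16 addresses and the /export/<name>/brick paths are taken from the commands above. It only prints the command and never contacts GlusterFS.

```shell
#!/bin/sh
# Hypothetical helpers (not part of GlusterFS): build the brick list for one volume.
# Assumes the storage addresses 192.168.115.11-16 used above.

brick_list() {
    # $1 = volume short name (e.g. "cinder"); prints one brick per host
    for n in $(seq 11 16); do
        printf '192.168.115.%s:/export/%s/brick ' "$n" "$1"
    done
}

volume_create_cmd() {
    # Prints (does not run) the create command for a replica-2 volume
    printf 'gluster volume create %svolume replica 2 transport tcp %s\n' \
        "$1" "$(brick_list "$1")"
}

# Dry run: show the command that would be executed for the cinder volume
volume_create_cmd cinder
```

Printing the command first makes it easy to review the brick order, which determines how bricks are paired into replica sets.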

- On the Controller/Network node, once the Compute nodes are done, just run:

apt-get -y install glusterfs-client
mkdir -p /var/lib/glance/images /var/lib/cinder
cat<<EOF>>/etc/fstab
192.168.115.11:/cindervolume /var/lib/cinder glusterfs defaults 1 1
EOF
mount -a

- Example of df output on cloud-01:

[root@cloud-01 ~]# df -h
Filesystem                    Size  Used Avail Use% Mounted on
/dev/sda6                      30G  8.5G   20G  31% /
none                          4.0K     0  4.0K   0% /sys/fs/cgroup
udev                           24G  4.0K   24G   1% /dev
tmpfs                         4.8G  2.8M  4.8G   1% /run
none                          5.0M     0  5.0M   0% /run/lock
none                           24G     0   24G   0% /run/shm
none                          100M     0  100M   0% /run/user
/dev/sda7                     600G  8.3G  592G   2% /export/glance
/dev/sda8                     1.3T   45M  1.3T   1% /export/cinder
/dev/sdb1                     1.9T   29G  1.8T   2% /var/lib/nova/instances
/dev/sda1                      95M   86M  1.7M  99% /boot
192.168.115.11:/cindervolume  3.7T  133M  3.7T   1% /var/lib/cinder
192.168.115.11:/cindervolume  3.7T  133M  3.7T   1% /var/lib/cinder/mnt/72fccc1699da9847d21965ee1e5c99bc

OpenStack configuration

- Controller/Network node and Compute nodes were installed according to OpenStack official documentation

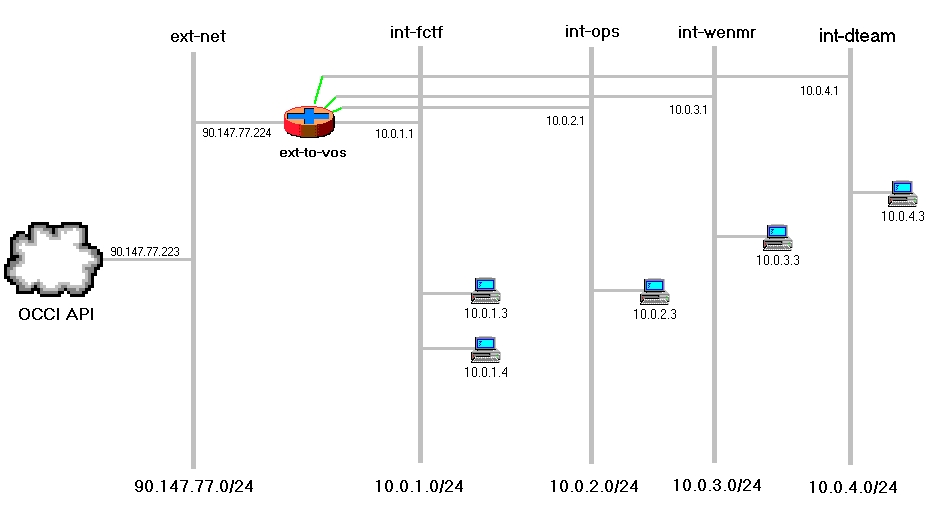

- We created one tenant for each EGI FedCloud VO supported, a router and various nets and subnets obtaining the following network topology:

EGI FedCloud specific configuration

(see EGI Doc)

- Install the CA certificates and the software for fetching the CRLs on both the Controller (egi-cloud) and Compute (cloud-01:06) nodes:

wget -q -O - https://dist.eugridpma.info/distribution/igtf/current/GPG-KEY-EUGridPMA-RPM-3 | apt-key add -
echo "deb http://repository.egi.eu/sw/production/cas/1/current egi-igtf core" > /etc/apt/sources.list.d/egi-cas.list
aptitude update
apt-get install ca-policy-egi-core
wget http://ftp.de.debian.org/debian/pool/main/f/fetch-crl/fetch-crl_2.8.5-2_all.deb
dpkg -i fetch-crl_2.8.5-2_all.deb
fetch-crl

Install OpenStack Keystone-VOMS module

- Prepare to run keystone as WSGI app in SSL

apt-get install python-m2crypto python-setuptools libvomsapi1 -y
apt-get install apache2 libapache2-mod-wsgi -y
a2enmod ssl
cp /etc/grid-security/certificates/hostcert.pem /etc/ssl/certs/
cp /etc/grid-security/certificates/hostkey.pem /etc/ssl/private/
cat <<EOF>/etc/apache2/sites-enabled/keystone
Listen 5000
WSGIDaemonProcess keystone user=keystone group=nogroup processes=8 threads=1
<VirtualHost _default_:5000>
    LogLevel warn
    ErrorLog \${APACHE_LOG_DIR}/error.log
    CustomLog \${APACHE_LOG_DIR}/ssl_access.log combined
    SSLEngine on
    SSLCertificateFile /etc/ssl/certs/hostcert.pem
    SSLCertificateKeyFile /etc/ssl/private/hostkey.pem
    SSLCACertificatePath /etc/ssl/certs
    SSLCARevocationPath /etc/ssl/certs
    SSLVerifyClient optional
    SSLVerifyDepth 10
    SSLProtocol all -SSLv2
    SSLCipherSuite ALL:!ADH:!EXPORT:!SSLv2:RC4+RSA:+HIGH:+MEDIUM:+LOW
    SSLOptions +StdEnvVars +ExportCertData
    WSGIScriptAlias / /usr/lib/cgi-bin/keystone/main
    WSGIProcessGroup keystone
</VirtualHost>
Listen 35357
WSGIDaemonProcess keystoneapi user=keystone group=nogroup processes=8 threads=1
<VirtualHost _default_:35357>
    LogLevel warn
    ErrorLog \${APACHE_LOG_DIR}/error.log
    CustomLog \${APACHE_LOG_DIR}/ssl_access.log combined
    SSLEngine on
    SSLCertificateFile /etc/ssl/certs/hostcert.pem
    SSLCertificateKeyFile /etc/ssl/private/hostkey.pem
    SSLCACertificatePath /etc/ssl/certs
    SSLCARevocationPath /etc/ssl/certs
    SSLVerifyClient optional
    SSLVerifyDepth 10
    SSLProtocol all -SSLv2
    SSLCipherSuite ALL:!ADH:!EXPORT:!SSLv2:RC4+RSA:+HIGH:+MEDIUM:+LOW
    SSLOptions +StdEnvVars +ExportCertData
    WSGIScriptAlias / /usr/lib/cgi-bin/keystone/admin
    WSGIProcessGroup keystoneapi
</VirtualHost>
EOF

- take the file keystone.py

- copy it to /usr/lib/cgi-bin/keystone/keystone.py and create the following links:

mkdir -p /usr/lib/cgi-bin/keystone
wget https://raw.githubusercontent.com/openstack/keystone/stable/juno/httpd/keystone.py
mv keystone.py /usr/lib/cgi-bin/keystone/keystone.py
ln /usr/lib/cgi-bin/keystone/keystone.py /usr/lib/cgi-bin/keystone/main
ln /usr/lib/cgi-bin/keystone/keystone.py /usr/lib/cgi-bin/keystone/admin
echo export OPENSSL_ALLOW_PROXY_CERTS=1 >>/etc/apache2/envvars
service apache2 restart

- Installing the Keystone-VOMS module:

git clone git://github.com/IFCA/keystone-voms.git -b stable/juno
cd keystone-voms
pip install .

- Enable the Keystone VOMS module

sed -i 's|#config_file=keystone-paste.ini|#config_file=/etc/keystone/keystone-paste.ini|g' /etc/keystone/keystone.conf
cat <<EOF>>/etc/keystone/keystone-paste.ini
[filter:voms]
paste.filter_factory = keystone_voms.core:VomsAuthNMiddleware.factory
EOF
sed -i 's|ec2_extension user_crud_extension|voms ec2_extension user_crud_extension|g' /etc/keystone/keystone-paste.ini

- Configuring the Keystone VOMS module

cat<<EOF>>/etc/keystone/keystone.conf
[voms]
vomsdir_path = /etc/grid-security/vomsdir
ca_path = /etc/grid-security/certificates
voms_policy = /etc/keystone/voms.json
vomsapi_lib = libvomsapi.so.1
autocreate_users = True
add_roles = False
user_roles = _member_
EOF
#
mkdir -p /etc/grid-security/vomsdir/fedcloud.egi.eu
cat > /etc/grid-security/vomsdir/fedcloud.egi.eu/voms1.egee.cesnet.cz.lsc << EOF
/DC=org/DC=terena/DC=tcs/OU=Domain Control Validated/CN=voms1.egee.cesnet.cz
/C=NL/O=TERENA/CN=TERENA eScience SSL CA
EOF
cat > /etc/grid-security/vomsdir/fedcloud.egi.eu/voms2.grid.cesnet.cz.lsc << EOF
/DC=org/DC=terena/DC=tcs/OU=Domain Control Validated/CN=voms2.grid.cesnet.cz
/C=NL/ST=Noord-Holland/L=Amsterdam/O=TERENA/CN=TERENA eScience SSL CA 2
EOF
mkdir -p /etc/grid-security/vomsdir/dteam
cat > /etc/grid-security/vomsdir/dteam/voms.hellasgrid.gr.lsc << EOF
/C=GR/O=HellasGrid/OU=hellasgrid.gr/CN=voms.hellasgrid.gr
/C=GR/O=HellasGrid/OU=Certification Authorities/CN=HellasGrid CA 2006
EOF
cat > /etc/grid-security/vomsdir/dteam/voms2.hellasgrid.gr.lsc << EOF
/C=GR/O=HellasGrid/OU=hellasgrid.gr/CN=voms2.hellasgrid.gr
/C=GR/O=HellasGrid/OU=Certification Authorities/CN=HellasGrid CA 2006
EOF
mkdir -p /etc/grid-security/vomsdir/enmr.eu
cat > /etc/grid-security/vomsdir/enmr.eu/voms2.cnaf.infn.it.lsc <<EOF
/C=IT/O=INFN/OU=Host/L=CNAF/CN=voms2.cnaf.infn.it
/C=IT/O=INFN/CN=INFN CA
EOF
cat > /etc/grid-security/vomsdir/enmr.eu/voms-02.pd.infn.it.lsc <<EOF
/C=IT/O=INFN/OU=Host/L=Padova/CN=voms-02.pd.infn.it
/C=IT/O=INFN/CN=INFN CA
EOF
for i in ops atlas lhcb cms
do
mkdir -p /etc/grid-security/vomsdir/$i
cat > /etc/grid-security/vomsdir/$i/lcg-voms2.cern.ch.lsc << EOF
/DC=ch/DC=cern/OU=computers/CN=lcg-voms2.cern.ch
/DC=ch/DC=cern/CN=CERN Grid Certification Authority
EOF
cat > /etc/grid-security/vomsdir/$i/voms2.cern.ch.lsc << EOF
/DC=ch/DC=cern/OU=computers/CN=voms2.cern.ch
/DC=ch/DC=cern/CN=CERN Grid Certification Authority
EOF
done
#
cat <<EOF>/etc/keystone/voms.json
{
    "fedcloud.egi.eu": { "tenant": "fctf" },
    "ops": { "tenant": "ops" },
    "enmr.eu": { "tenant": "wenmr" },
    "dteam": { "tenant": "dteam" },
    "atlas": { "tenant": "atlas" },
    "lhcb": { "tenant": "lhcb" },
    "cms": { "tenant": "cms" }
}
EOF
#
service apache2 restart

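Each .lsc file written above has the same two-line shape: the VOMS server DN followed by the DN of its issuing CA. As a rough sketch, the repeated heredocs could be folded into one helper. `write_lsc` and the `VOMSDIR` variable are hypothetical names introduced for illustration; the DNs are the CERN ones used in the loop above, and the sketch writes to a scratch directory unless `VOMSDIR` is set.

```shell
#!/bin/sh
# Hypothetical helper: write one .lsc file (server DN on line 1, CA DN on line 2).
# VOMSDIR defaults to a scratch directory so the sketch is safe to run;
# on the real node it would be /etc/grid-security/vomsdir.
VOMSDIR=${VOMSDIR:-$(mktemp -d)}

write_lsc() {
    # $1 = VO name, $2 = VOMS server host name, $3 = server DN, $4 = issuing CA DN
    mkdir -p "$VOMSDIR/$1"
    printf '%s\n%s\n' "$3" "$4" > "$VOMSDIR/$1/$2.lsc"
}

# The four CERN-served VOs share the same two servers, as in the loop above
for vo in ops atlas lhcb cms; do
    write_lsc "$vo" lcg-voms2.cern.ch \
        "/DC=ch/DC=cern/OU=computers/CN=lcg-voms2.cern.ch" \
        "/DC=ch/DC=cern/CN=CERN Grid Certification Authority"
    write_lsc "$vo" voms2.cern.ch \
        "/DC=ch/DC=cern/OU=computers/CN=voms2.cern.ch" \
        "/DC=ch/DC=cern/CN=CERN Grid Certification Authority"
done

# List what was written
find "$VOMSDIR" -name '*.lsc' | sort
```
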
- Manually adjust the keystone catalog so that the identity backend points to the correct URLs:

- public URL: https://egi-cloud.pd.infn.it:5000/v2.0

- admin URL: https://egi-cloud.pd.infn.it:35357/v2.0

- internal URL: https://egi-cloud.pd.infn.it:5000/v2.0

mysql> use keystone;
mysql> update endpoint set url="https://egi-cloud.pd.infn.it:5000/v2.0" where url="http://egi-cloud.pd.infn.it:5000/v2.0";
mysql> update endpoint set url="https://egi-cloud.pd.infn.it:35357/v2.0" where url="http://egi-cloud.pd.infn.it:35357/v2.0";
mysql> select id,url from endpoint;
should show lines with the above URLs.

- Replace http with https in auth_[protocol,uri,url] variables and IP address with egi-cloud.pd.infn.it in auth_[host,uri,url] in /etc/nova/nova.conf, /etc/nova/api-paste.ini, /etc/neutron/neutron.conf, /etc/neutron/api-paste.ini, /etc/neutron/metadata_agent.ini, /etc/cinder/cinder.conf, /etc/cinder/api-paste.ini, /etc/glance/glance-api.conf, /etc/glance/glance-registry.conf, /etc/glance/glance-cache.conf and any other service that needs to check keystone tokens, and then restart the services of the Controller node

- Replace http with https in auth_[protocol,uri,url] variables and IP address with egi-cloud.pd.infn.it in auth_[host,uri,url] in /etc/nova/nova.conf and /etc/neutron/neutron.conf and restart the services openstack-nova-compute and neutron-openvswitch-agent of the Compute nodes.
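
The replacement described in the two items above can be sketched with sed. This is only an illustration under stated assumptions: `secure_auth_settings` is a hypothetical helper, the file is assumed to use plain `key = value` lines, and the IP address in the demonstration is a made-up placeholder. It runs on a scratch file, not on the real configuration.

```shell
#!/bin/sh
# Hypothetical helper: rewrite the auth_* settings of one INI-style file.
# Only lines starting with auth_protocol, auth_host, auth_uri or auth_url
# are touched; everything else is copied unchanged.

secure_auth_settings() {
    # $1 = configuration file, $2 = Keystone host name
    tmp=$(mktemp) || return 1
    sed -E \
        -e "s|^(auth_protocol[[:space:]]*=[[:space:]]*)http\$|\1https|" \
        -e "s|^(auth_host[[:space:]]*=[[:space:]]*).*\$|\1$2|" \
        -e "s|^(auth_ur[il][[:space:]]*=[[:space:]]*)https?://[^:/]+|\1https://$2|" \
        "$1" > "$tmp" && cat "$tmp" > "$1"
    rm -f "$tmp"
}

# Demonstration on a scratch file (192.168.60.40 is a placeholder address)
demo=$(mktemp)
cat > "$demo" <<'EOF'
[keystone_authtoken]
auth_uri = http://192.168.60.40:5000/v2.0
auth_host = 192.168.60.40
auth_protocol = http
admin_user = nova
EOF
secure_auth_settings "$demo" egi-cloud.pd.infn.it
cat "$demo"
```

Writing the result back with `cat` rather than `mv` keeps the ownership and permissions of the original file, which matters for files readable only by the service user.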

- Do the following in both Controller and Compute nodes (see here):

cp /etc/grid-security/certificates/INFN-CA-2006.pem /usr/local/share/ca-certificates/INFN-CA-2006.crt
update-ca-certificates

- Also check that the "cafile" variable points to INFN-CA-2006.pem in all service configuration files and in the admin-openrc.sh file.

Install the OCCI API

(only on Controller node)

pip install pyssf
git clone https://github.com/EGI-FCTF/occi-os.git -b stable/juno
cd occi-os
python setup.py install
cat <<EOF >>/etc/nova/api-paste.ini
########
# OCCI #
########
[composite:occiapi]
use = egg:Paste#urlmap
/: occiapppipe
[pipeline:occiapppipe]
pipeline = authtoken keystonecontext occiapp
# with request body size limiting and rate limiting
# pipeline = sizelimit authtoken keystonecontext ratelimit occiapp
[app:occiapp]
use = egg:openstackocci-juno#occi_app
EOF

- Make sure the API occiapi is enabled in the /etc/nova/nova.conf configuration file:

crudini --set /etc/nova/nova.conf DEFAULT enabled_apis ec2,occiapi,osapi_compute,metadata
crudini --set /etc/nova/nova.conf DEFAULT occiapi_listen_port 9000

- Add this line in /etc/nova/nova.conf (needed to allow floating-ip association via occi-client):

crudini --set /etc/nova/nova.conf DEFAULT default_floating_pool ext-net

- Modify the /etc/nova/policy.json file in order to allow any user to get details about VMs not owned by her/him, while she/he cannot execute any other action (stop/suspend/pause/terminate/...) on them (see slide 7 here):

sed -i 's|"admin_or_owner": "is_admin:True or project_id:%(project_id)s",|"admin_or_owner": "is_admin:True or project_id:%(project_id)s",\n "admin_or_user": "is_admin:True or user_id:%(user_id)s",|g' /etc/nova/policy.json
sed -i 's|"default": "rule:admin_or_owner",|"default": "rule:admin_or_user",|g' /etc/nova/policy.json
sed -i 's|"compute:get_all": "",|"compute:get": "rule:admin_or_owner",\n "compute:get_all": "",|g' /etc/nova/policy.json

- and restart the nova-* services:

for i in nova-api nova-cert nova-consoleauth nova-scheduler nova-conductor nova-novncproxy; do service $i restart; done;

- Register service in Keystone:

keystone service-create --name occi_api --type occi --description 'Nova OCCI Service'
keystone endpoint-create --service-id $(keystone service-list | awk '/ OCCI / {print $2}') --region regionOne --publicurl https://$HOSTNAME:8787/ --internalurl https://$HOSTNAME:8787/ --adminurl https://$HOSTNAME:8787/

- Enable SSL connection on port 8787, by creating the file /etc/apache2/sites-available/occi.conf

# $EGIHOST is expanded by the shell when the file is written: set it first
# (the ProxyPass lines below suggest egi-cloud.pd.infn.it)
cat <<EOF>/etc/apache2/sites-available/occi.conf
#
# Proxy Server directives. Uncomment the following lines to
# enable the proxy server:
#LoadModule proxy_module /usr/lib/apache2/modules/mod_proxy.so
#LoadModule proxy_http_module /usr/lib/apache2/modules/mod_proxy_http.so
#LoadModule substitute_module /usr/lib/apache2/modules/mod_substitute.so
Listen 8787
<VirtualHost _default_:8787>
    <Directory />
        Require all granted
    </Directory>
    ServerName $EGIHOST
    LogLevel debug
    ErrorLog \${APACHE_LOG_DIR}/occi-error.log
    CustomLog \${APACHE_LOG_DIR}/occi-ssl_access.log combined
    SSLEngine on
    SSLCertificateFile /etc/ssl/certs/hostcert.pem
    SSLCertificateKeyFile /etc/ssl/private/hostkey.pem
    SSLCACertificatePath /etc/ssl/certs
    SSLCARevocationPath /etc/ssl/certs
    # SSLCARevocationCheck chain
    SSLVerifyClient optional
    SSLVerifyDepth 10
    SSLProtocol all -SSLv2
    SSLCipherSuite ALL:!ADH:!EXPORT:!SSLv2:RC4+RSA:+HIGH:+MEDIUM:+LOW
    SSLOptions +StdEnvVars +ExportCertData
    <IfModule mod_proxy.c>
        # Do not enable proxying with ProxyRequests until you have secured
        # your server.
        # Open proxy servers are dangerous both to your network and to the
        # Internet at large.
        ProxyRequests Off
        <Proxy *>
            #Order deny,allow
            #Deny from all
            Require all denied
            #Allow from .example.com
        </Proxy>
        ProxyPass / http://egi-cloud.pd.infn.it:9000/ connectiontimeout=600 timeout=600
        ProxyPassReverse / http://egi-cloud.pd.infn.it:9000/
        FilterDeclare OCCIFILTER
        FilterProvider OCCIFILTER SUBSTITUTE \"%{CONTENT_TYPE} = \'text/\'\"
        FilterProvider OCCIFILTER SUBSTITUTE \"%{CONTENT_TYPE} = \'application/\'\"
        # FilterProvider OCCIFILTER SUBSTITUTE resp=Content-Type $text/
        # FilterProvider OCCIFILTER SUBSTITUTE resp=Content-Type $application/
        <Location />
            # AddOutputFilterByType SUBSTITUTE text/plain
            FilterChain OCCIFILTER
            Substitute s|http://$EGIHOST:9000|https://$EGIHOST:8787|n
            Require all granted
        </Location>
    </IfModule>
</VirtualHost>
EOF
cat<<EOF>>/etc/sudoers.d/nova_sudoers
nova ALL = (root) NOPASSWD: /usr/local/bin/nova-rootwrap /etc/nova/rootwrap.conf *
EOF
a2enmod proxy
a2enmod proxy_http
a2enmod substitute
a2ensite occi
service apache2 reload
service apache2 restart

Install rOCCI Client

- We installed the rOCCI client on top of an EMI UI with small changes from this guide:

[root@prod-ui-02]# curl -L https://get.rvm.io | bash -s stable
[root@prod-ui-02]# source /etc/profile.d/rvm.sh
[root@prod-ui-02]# rvm install ruby
[root@prod-ui-02]# gem install occi-cli

- As a normal user, an example of usage with basic commands is:

# create ssh-key for accessing VM as cloudadm:
[prod-ui-02]# ssh-keygen -t rsa -b 2048 -f tmpfedcloud
[prod-ui-02]# cat > tmpfedcloud.login << EOF
#cloud-config
users:
  - name: cloudadm
    sudo: ALL=(ALL) NOPASSWD:ALL
    lock-passwd: true
    ssh-import-id: cloudadm
    ssh-authorized-keys:
      - `cat tmpfedcloud.pub`
EOF
# create your VOMS proxy:
[prod-ui-02]# voms-proxy-init -voms fedcloud.egi.eu -rfc
...
# query the Cloud provider to see what is available (flavors and images):
[prod-ui-02]# occi --endpoint https://egi-cloud.pd.infn.it:8787/ --auth x509 --voms --action describe --resource resource_tpl
#####################################################################################################################
[[ http://schemas.openstack.org/template/resource#m1-xlarge ]]
title: Flavor: m1.xlarge
term: m1-xlarge
location: /m1-xlarge/
#####################################################################################################################
[[ http://schemas.openstack.org/template/resource#m1-large ]]
title: Flavor: m1.large
term: m1-large
location: /m1-large/
#####################################################################################################################
[[ http://schemas.openstack.org/template/resource#hpc ]]
title: Flavor: hpc
term: hpc
location: /hpc/
#####################################################################################################################
[[ http://schemas.openstack.org/template/resource#1cpu-2gb-40dsk ]]
title: Flavor: 1cpu-2gb-40dsk
term: 1cpu-2gb-40dsk
location: /1cpu-2gb-40dsk/
#####################################################################################################################
[[ http://schemas.openstack.org/template/resource#m1-tiny ]]
title: Flavor: m1.tiny
term: m1-tiny
location: /m1-tiny/
#####################################################################################################################
[[ http://schemas.openstack.org/template/resource#m1-small ]]
title: Flavor: m1.small
term: m1-small
location: /m1-small/
#####################################################################################################################
[[ http://schemas.openstack.org/template/resource#1cpu-3gb-50dsk ]]
title: Flavor: 1cpu-3gb-50dsk
term: 1cpu-3gb-50dsk
location: /1cpu-3gb-50dsk/
#####################################################################################################################
[[ http://schemas.openstack.org/template/resource#m1-medium ]]
title: Flavor: m1.medium
term: m1-medium
location: /m1-medium/
#####################################################################################################################
#
#
#
[prod-ui-02 ~]$ occi --endpoint https://egi-cloud.pd.infn.it:8787/ --auth x509 --voms --action describe --resource os_tpl
#####################################################################################################################
[[ http://schemas.openstack.org/template/os#23993686-2895-4ecc-a1a0-853666f1d7b4 ]]
title: Image: Image for CentOS 6 minimal [CentOS/6.x/KVM]_fctf
term: 23993686-2895-4ecc-a1a0-853666f1d7b4
location: /23993686-2895-4ecc-a1a0-853666f1d7b4/
#####################################################################################################################
[[ http://schemas.openstack.org/template/os#9746d4be-09c4-4731-bb2e-451c9e3046b2 ]]
title: Image: cirros-0.3.3-x86_64
term: 9746d4be-09c4-4731-bb2e-451c9e3046b2
location: /9746d4be-09c4-4731-bb2e-451c9e3046b2/
#####################################################################################################################
[[ http://schemas.openstack.org/template/os#0d3f5f9d-a59e-434d-9b99-70200e4346d6 ]]
title: Image: Image for MoinMoin wiki [Ubuntu/14.04/KVM]_fctf
term: 0d3f5f9d-a59e-434d-9b99-70200e4346d6
location: /0d3f5f9d-a59e-434d-9b99-70200e4346d6/
#####################################################################################################################
[[ http://schemas.openstack.org/template/os#793f1a26-3210-4cf3-b909-67a5ebbdeb1e ]]
title: Image: Image for EGI Centos 6 [CentOS/6/KVM]_fctf
term: 793f1a26-3210-4cf3-b909-67a5ebbdeb1e
location: /793f1a26-3210-4cf3-b909-67a5ebbdeb1e/
#####################################################################################################################
[[ http://schemas.openstack.org/template/os#0d940c9c-53cb-4d01-8008-3589f5798114 ]]
title: Image: Image for EGI Ubuntu 14.04 [Ubuntu/14.04/KVM]_fctf
term: 0d940c9c-53cb-4d01-8008-3589f5798114
location: /0d940c9c-53cb-4d01-8008-3589f5798114/
#####################################################################################################################
[[ http://schemas.openstack.org/template/os#821a0b62-9abb-41a5-8079-317d969495a2 ]]
title: Image: OS Disk Image_fctf
term: 821a0b62-9abb-41a5-8079-317d969495a2
location: /821a0b62-9abb-41a5-8079-317d969495a2/
#####################################################################################################################
[[ http://schemas.openstack.org/template/os#9e498ce9-11f9-4ae3-beff-cdf072f3c85c ]]
title: Image: Image for Ubuntu Server 14.04 LTS [Ubuntu/14.04 LTS/KVM]_fctf
term: 9e498ce9-11f9-4ae3-beff-cdf072f3c85c
location: /9e498ce9-11f9-4ae3-beff-cdf072f3c85c/
#####################################################################################################################
[[ http://schemas.openstack.org/template/os#0430a496-deba-4442-b674-3d6c5e585746 ]]
title: Image: Image for DCI-Bridge [Debian/7.0/KVM]_fctf
term: 0430a496-deba-4442-b674-3d6c5e585746
location: /0430a496-deba-4442-b674-3d6c5e585746/
#####################################################################################################################
[[ http://schemas.openstack.org/template/os#0e00db5c-cf59-4e72-afac-a9e4662d23a6 ]]
title: Image: Image for COMPSs_LOFAR [Debian/7/KVM]_fctf
term: 0e00db5c-cf59-4e72-afac-a9e4662d23a6
location: /0e00db5c-cf59-4e72-afac-a9e4662d23a6/
#####################################################################################################################
[[ http://schemas.openstack.org/template/os#951fab71-e599-4475-a068-27c309cd2948 ]]
title: Image: Basic Ubuntu Server 12.04 LTS OS Disk Image_fctf
term: 951fab71-e599-4475-a068-27c309cd2948
location: /951fab71-e599-4475-a068-27c309cd2948/
#####################################################################################################################
[[ http://schemas.openstack.org/template/os#55f18599-e863-491a-83d4-28823b0345c0 ]]
title: Image: Image for COMPSs-PMES [Debian/7/KVM]_fctf
term: 55f18599-e863-491a-83d4-28823b0345c0
location: /55f18599-e863-491a-83d4-28823b0345c0/
#####################################################################################################################
[[ http://schemas.openstack.org/template/os#c5c0f08f-096b-4ac2-980e-1fe6c0256e83 ]]
title: Image: Image for EGI FedCloud Clients [Ubuntu/14.04/KVM]_fctf
term: c5c0f08f-096b-4ac2-980e-1fe6c0256e83
location: /c5c0f08f-096b-4ac2-980e-1fe6c0256e83/
#####################################################################################################################
[[ http://schemas.openstack.org/template/os#05db8c2f-67a9-4e41-867a-7b5205ac4e4d ]]
title: Image: Image for EGI Ubuntu 12.04 [Ubuntu/12.04/KVM]_fctf
term: 05db8c2f-67a9-4e41-867a-7b5205ac4e4d
location: /05db8c2f-67a9-4e41-867a-7b5205ac4e4d/
#####################################################################################################################
[[ http://schemas.openstack.org/template/os#28df1c95-8cf2-4d6b-94bb-0cf02a23f057 ]]
title: Image: Image for CernVM [Scientific Linux/6.0/KVM]_fctf
term: 28df1c95-8cf2-4d6b-94bb-0cf02a23f057
location: /28df1c95-8cf2-4d6b-94bb-0cf02a23f057/
#####################################################################################################################
#
# create a VM of flavor "m1-medium" and OS "Ubuntu Server 14.04":
[prod-ui-02]# occi --endpoint https://egi-cloud.pd.infn.it:8787/ --auth x509 --voms --action create -r compute -M resource_tpl#m1-medium -M os_tpl#9e498ce9-11f9-4ae3-beff-cdf072f3c85c --context user_data="file://$PWD/tmpfedcloud.login" --attribute occi.core.title="rOCCI-ubu"
https://egi-cloud.pd.infn.it:8787/compute/<resourceID>
#
# assign a floating-ip to the VM:
[prod-ui-02]# occi --endpoint https://egi-cloud.pd.infn.it:8787/ --auth x509 --voms --action link --resource /compute/<resourceID> --link /network/public
#
# discover the floating-ip assigned:
[prod-ui-02]# occi --endpoint https://egi-cloud.pd.infn.it:8787/ --auth x509 --voms --action describe --resource /compute/<resourceID>
...
occi.networkinterface.address = 90.147.77.226
occi.core.target = /network/public
occi.core.source = /compute/<resourceID>
occi.core.id = /network/interface/<net-resourceID>
...
#
# access the VM via ssh:
[prod-ui-02]# ssh -i tmpfedcloud -p 22 cloudadm@90.147.77.226
Enter passphrase for key 'tmpfedcloud':
Welcome to Ubuntu 14.04
...
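
When scripting the last two steps, the assigned address can be pulled out of the describe output instead of being read by eye. A minimal sketch follows, assuming the client prints one `attribute = value` pair per line as in the excerpt above; `occi_addresses` is a hypothetical helper name and no endpoint is contacted here.

```shell
#!/bin/sh
# Hypothetical helper: read "occi ... --action describe" output on stdin and
# print the value of every occi.networkinterface.address attribute.

occi_addresses() {
    sed -n 's/^[[:space:]]*occi\.networkinterface\.address[[:space:]]*=[[:space:]]*//p'
}

# Demonstration with attribute lines copied from the excerpt above
occi_addresses <<'EOF'
occi.networkinterface.address = 90.147.77.226
occi.core.target = /network/public
EOF
```

If the VM has more than one network interface, the helper prints one address per line, so the caller still has to pick the public one.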

Install FedCloud BDII

- See the guide here

- Installing the resource bdii and the cloud-info-provider:

apt-get install bdii
git clone https://github.com/EGI-FCTF/BDIIscripts
cd BDIIscripts
pip install .

- Customize the configuration file with the local site's information:

cp BDIIscripts/etc/sample.openstack.yaml /etc/cloud-info-provider/openstack.yaml
sed -i 's|#name: SITE_NAME|name: INFN-PADOVA-STACK|g' /etc/cloud-info-provider/openstack.yaml
sed -i 's|#production_level: production|production_level: production|g' /etc/cloud-info-provider/openstack.yaml
sed -i 's|#url: http://site.url.example.org/|#url: http://www.pd.infn.it|g' /etc/cloud-info-provider/openstack.yaml
sed -i 's|#country: ES|country: IT|g' /etc/cloud-info-provider/openstack.yaml
sed -i 's|#ngi: NGI_FOO|ngi: NGI_IT|g' /etc/cloud-info-provider/openstack.yaml
sed -i 's|#latitude: 0.0|latitude: 45.41|g' /etc/cloud-info-provider/openstack.yaml
sed -i 's|#longitude: 0.0|longitude: 11.89|g' /etc/cloud-info-provider/openstack.yaml
sed -i 's|#general_contact: general-support@example.org|general_contact: cloud-prod@lists.pd.infn.it|g' /etc/cloud-info-provider/openstack.yaml
sed -i 's|#security_contact: security-support@example.org|security_contact: grid-sec@pd.infn.it|g' /etc/cloud-info-provider/openstack.yaml
sed -i 's|#user_support_contact: user-support@example.org|user_support_contact: cloud-prod@lists.pd.infn.it|g' /etc/cloud-info-provider/openstack.yaml
sed -i 's|total_cores: 0|total_cores: 144|g' /etc/cloud-info-provider/openstack.yaml
sed -i 's|total_ram: 0|total_ram: 285|g' /etc/cloud-info-provider/openstack.yaml
sed -i 's|hypervisor: Foo Hypervisor|hypervisor: KVM Hypervisor|g' /etc/cloud-info-provider/openstack.yaml
sed -i 's|hypervisor_version: 0.0.0|hypervisor_version: 2.0.0|g' /etc/cloud-info-provider/openstack.yaml
sed -i 's|middleware_version: havana|middleware_version: Juno|g' /etc/cloud-info-provider/openstack.yaml
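
Because each sed command silently does nothing when its pattern is not found, it can help to check afterwards that no sample value survived. The sketch below is one possible check, not part of the cloud-info-provider: `leftover_placeholders` is a hypothetical helper, and its patterns are simply the sample values targeted by the sed commands above. It is demonstrated on a scratch file.

```shell
#!/bin/sh
# Hypothetical check: report sample values that survived the sed edits.
# The patterns are the placeholders targeted by the sed commands above.

leftover_placeholders() {
    # $1 = YAML file; prints matching lines with line numbers,
    # returns 0 if at least one placeholder is still present
    grep -nE 'SITE_NAME|NGI_FOO|example\.org|Foo Hypervisor|total_(cores|ram): 0$' "$1"
}

# Demonstration on a scratch file with one edited and one forgotten entry
sample=$(mktemp)
printf 'name: INFN-PADOVA-STACK\n#ngi: NGI_FOO\n' > "$sample"
if leftover_placeholders "$sample"; then
    echo "placeholders still present"
else
    echo "no placeholders left"
fi
```

On the real node the argument would be /etc/cloud-info-provider/openstack.yaml.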

- Be sure that keystone contains the OCCI endpoint, otherwise it will not be published by the BDII:

[root@egi-cloud ~]# keystone service-list
[root@egi-cloud ~]# keystone service-create --name nova-occi --type occi --description 'Nova OCCI Service'
[root@egi-cloud ~]# keystone endpoint-create --service_id <the one obtained above> --region RegionOne --publicurl https://$HOSTNAME:8787/ --internalurl https://$HOSTNAME:8787/ --adminurl https://$HOSTNAME:8787/

- By default, the provider script filters out images that have no marketplace URI defined in the marketplace or vmcatcher_event_ad_mpuri property. If you want to list all the image templates (including local snapshots), set the variable 'require_marketplace_id: false' under 'compute' → 'images' → 'defaults' in the YAML configuration file.

- Create the file /var/lib/bdii/gip/provider/cloud-info-provider that calls the provider with the correct options for your site, for example:

#!/bin/sh
cloud-info-provider-service --yaml /etc/cloud-info-provider/openstack.yaml \
    --middleware openstack \
    --os-username <username> --os-password <passwd> \
    --os-tenant-name <tenant> --os-auth-url <url>

- Run the cloud-info-provider script manually and check that its output is the complete LDIF. To do so, execute:

[root@egi-cloud ~]# chmod +x /var/lib/bdii/gip/provider/cloud-info-provider
[root@egi-cloud ~]# /var/lib/bdii/gip/provider/cloud-info-provider

- Now you can start the bdii service:

[root@egi-cloud ~]# service bdii start

- Use the command below to see if the information is being published:

[root@egi-cloud ~]# ldapsearch -x -h localhost -p 2170 -b o=glue
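
A quick way to tell an empty tree from a populated one is to count the entries returned by the query above. The following is a small sketch: `count_ldif_entries` is a hypothetical helper, and the two entries in the demonstration are hand-written examples rather than real BDII output.

```shell
#!/bin/sh
# Hypothetical helper: count the entries in LDIF text read from stdin.
# Every LDIF entry starts with a "dn:" line.

count_ldif_entries() {
    grep -c '^dn:'
}

# Intended use on the BDII node (not run here):
#   ldapsearch -x -h localhost -p 2170 -b o=glue | count_ldif_entries

# Demonstration with two hand-written entries
count_ldif_entries <<'EOF'
dn: o=glue
objectClass: organization

dn: GLUE2GroupID=cloud,o=glue
objectClass: GLUE2Group
EOF
```
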

- Information on how to set up the site-BDII in egi-cloud-sbdii.pd.infn.it is available here

- Add your cloud-info-provider to your site-BDII egi-cloud-sbdii.pd.infn.it by adding new lines in the site.def like this:

BDII_REGIONS="CLOUD BDII"
BDII_CLOUD_URL="ldap://egi-cloud.pd.infn.it:2170/GLUE2GroupID=cloud,o=glue"
BDII_BDII_URL="ldap://egi-cloud-sbdii.pd.infn.it:2170/mds-vo-name=resource,o=grid"

Install vmcatcher/glancepush

- VMcatcher allows users to subscribe to Virtual Machine image lists, cache the images referenced in those lists, validate the image lists with X.509-based public key cryptography, and validate the images against the SHA-512 hashes in the lists. It also provides events so that further applications can process updates or expiries of images without having to validate them again (see this guide).

useradd -m -b /opt stack
STACKHOME=/opt/stack
apt-get install python-m2crypto python-setuptools qemu-utils -y
pip install nose
git clone https://github.com/hepix-virtualisation/hepixvmitrust.git -b hepixvmitrust-0.0.18
git clone https://github.com/hepix-virtualisation/smimeX509validation.git -b smimeX509validation-0.0.17
git clone https://github.com/hepix-virtualisation/vmcatcher.git -b vmcatcher-0.6.1
wget http://repository.egi.eu/community/software/python.glancepush/0.0.X/releases/generic/0.0.6/python-glancepush-0.0.6.tar.gz
wget http://repository.egi.eu/community/software/openstack.handler.for.vmcatcher/0.0.X/releases/generic/0.0.7/gpvcmupdate-0.0.7.tar.gz
tar -zxvf python-glancepush-0.0.6.tar.gz -C $STACKHOME/
tar -zxvf gpvcmupdate-0.0.7.tar.gz -C $STACKHOME/
for i in hepixvmitrust smimeX509validation vmcatcher $STACKHOME/python-glancepush-0.0.6 $STACKHOME/gpvcmupdate-0.0.7
do
    cd $i
    python setup.py install
    echo exit code=$?
    cd
done
mkdir -p /var/lib/swift/vmcatcher
ln -fs /var/lib/swift/vmcatcher $STACKHOME/
mkdir -p $STACKHOME/vmcatcher/cache $STACKHOME/vmcatcher/cache/partial $STACKHOME/vmcatcher/cache/expired $STACKHOME/vmcatcher/tmp
mkdir -p /var/spool/glancepush /var/log/glancepush/ /etc/glancepush /etc/glancepush/transform /etc/glancepush/meta /etc/glancepush/test /etc/glancepush/clouds
cp /etc/keystone/voms.json /etc/glancepush/

- Now for each VO/tenant you have in voms.json write a file like this:

[root@egi-cloud ~]# su - stack
[stack@egi-cloud ~]# cat << EOF > /etc/glancepush/clouds/dteam
[general]
# Tenant for this VO. Must match the tenant defined in voms.json file
testing_tenant=dteam
# Identity service endpoint (Keystone)
endpoint_url=https://egi-cloud.pd.infn.it:35357/v2.0
# User Password
password=ADMIN_PASS
# User
username=admin
# Set this to true if you're NOT using self-signed certificates
is_secure=True
# SSH private key that will be used to perform policy checks (to be done)
ssh_key=/opt/stack/.ssh/id_rsa
# WARNING: Only define the next variable if you're going to need it. Otherwise you may encounter problems
#cacert=path_to_your_cert
EOF

- and for images not belonging to any VO, use the admin tenant:

[stack@egi-cloud ~]# cat << EOF > /etc/glancepush/clouds/openstack
[general]
# Tenant for this VO. Must match the tenant defined in voms.json file
testing_tenant=admin
# Identity service endpoint (Keystone)
endpoint_url=https://egi-cloud.pd.infn.it:35357/v2.0
# User Password
password=ADMIN_PASS
# User
username=admin
# Set this to true if you're NOT using self-signed certificates
is_secure=True
# SSH private key that will be used to perform policy checks (to be done)
ssh_key=/opt/stack/.ssh/id_rsa
# WARNING: Only define the next variable if you're going to need it. Otherwise you may encounter problems
#cacert=path_to_your_cert
EOF
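
Since the per-tenant files differ only in the tenant name, they can be generated in a loop. The sketch below is an illustration under assumptions: `write_cloud_conf` and `CLOUDS_DIR` are hypothetical names, the tenant list is the one from voms.json above, and naming each file after its tenant (as done for dteam) is assumed to hold for the other VOs too. It writes to a scratch directory unless `CLOUDS_DIR` is set.

```shell
#!/bin/sh
# Hypothetical helper: write one glancepush cloud file per tenant.
# CLOUDS_DIR defaults to a scratch directory so the sketch is safe to run;
# on the real node it would be /etc/glancepush/clouds.
CLOUDS_DIR=${CLOUDS_DIR:-$(mktemp -d)}

write_cloud_conf() {
    # $1 = file name, $2 = tenant name as defined in voms.json
    cat > "$CLOUDS_DIR/$1" <<EOF
[general]
testing_tenant=$2
endpoint_url=https://egi-cloud.pd.infn.it:35357/v2.0
password=ADMIN_PASS
username=admin
is_secure=True
ssh_key=/opt/stack/.ssh/id_rsa
EOF
}

# One file per tenant listed in voms.json (file naming is an assumption)
for tenant in fctf ops wenmr dteam atlas lhcb cms; do
    write_cloud_conf "$tenant" "$tenant"
done
# Images that belong to no VO go to the admin tenant
write_cloud_conf openstack admin

ls "$CLOUDS_DIR"
```

ADMIN_PASS is the same placeholder used above and has to be replaced with the real password before the files are used.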

- Check that vmcatcher is running properly by listing and subscribing to an image list

[stack@egi-cloud ~]# cat <<EOF>>.bashrc
export VMCATCHER_RDBMS="sqlite:///$STACKHOME/vmcatcher/vmcatcher.db"
export VMCATCHER_CACHE_DIR_CACHE="$STACKHOME/vmcatcher/cache"
export VMCATCHER_CACHE_DIR_DOWNLOAD="$STACKHOME/vmcatcher/cache/partial"
export VMCATCHER_CACHE_DIR_EXPIRE="$STACKHOME/vmcatcher/cache/expired"
EOF
[stack@egi-cloud ~]# export VMCATCHER_RDBMS="sqlite:////opt/stack/vmcatcher/vmcatcher.db"
[stack@egi-cloud ~]# vmcatcher_subscribe -l
[stack@egi-cloud ~]# vmcatcher_subscribe -e -s https://<your EGI SSO token>:x-oauth-basic@vmcaster.appdb.egi.eu/store/vo/fedcloud.egi.eu/image.list
[stack@ocp-ctrl ~]$ vmcatcher_subscribe -l
76fdee70-8119-5d33-9f40-3c57e1c60df1 True None https://vmcaster.appdb.egi.eu/store/vo/fedcloud.egi.eu/image.list

- Create a CRON wrapper for vmcatcher, named $STACKHOME/gpvcmupdate-0.0.7/vmcatcher_eventHndl_OS_cron.sh, using the following code:

#!/bin/bash
#Cron handler for VMCatcher image synchronization script for OpenStack

#Vmcatcher configuration variables
export VMCATCHER_RDBMS="sqlite:///$STACKHOME/vmcatcher/vmcatcher.db"
export VMCATCHER_CACHE_DIR_CACHE="$STACKHOME/vmcatcher/cache"
export VMCATCHER_CACHE_DIR_DOWNLOAD="$STACKHOME/vmcatcher/cache/partial"
export VMCATCHER_CACHE_DIR_EXPIRE="$STACKHOME/vmcatcher/cache/expired"
export VMCATCHER_CACHE_EVENT="python /usr/local/bin/gpvcmupdate.py -D"

#Update vmcatcher image lists
/usr/local/bin/vmcatcher_subscribe -U

#Add all the new images to the cache
for a in $(/usr/local/bin/vmcatcher_image -l | awk '{if ($2==2) print $1}'); do
    /usr/local/bin/vmcatcher_image -a -u $a
done

#Update the cache
/usr/local/bin/vmcatcher_cache -v -v

#Run glancepush
python /usr/local/bin/glancepush.py
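The loop in the wrapper selects image identifiers with awk '{if ($2==2) print $1}': it prints the first column of every line whose second column equals 2. The sketch below runs the same filter on made-up input. The sample lines are invented for illustration and are not real vmcatcher_image -l output, and the function name select_new_images is hypothetical.

```shell
# Same awk filter as in the wrapper, wrapped in a function that reads stdin.
select_new_images() {
    awk '{if ($2==2) print $1}'
}

# Made-up sample: an identifier, a numeric second column, a third column.
printf '%s\n' \
    'aaaa-1111 2 listA' \
    'bbbb-2222 3 listA' \
    'cccc-3333 2 listB' | select_new_images
# prints aaaa-1111 and cccc-3333, one per line
```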

- Add admin user to the tenants and set the right ownership to directories

[root@egi-cloud ~]# for vo in atlas cms lhcb dteam ops wenmr fctf; do keystone user-role-add --user admin --tenant $vo --role _member_; done
[root@egi-cloud ~]# chown -R stack:stack $STACKHOME

- Test that the vmcatcher handler is working correctly by running:

[stack@egi-cloud ~]# chmod +x $STACKHOME/gpvcmupdate-0.0.7/vmcatcher_eventHndl_OS_cron.sh
[stack@egi-cloud ~]# $STACKHOME/gpvcmupdate-0.0.7/vmcatcher_eventHndl_OS_cron.sh

- Add the following line to the stack user crontab:

50 */6 * * * $STACKHOME/gpvcmupdate-0.0.7/vmcatcher_eventHndl_OS_cron.sh >> /var/log/glancepush/vmcatcher.log 2>&1

- Useful links for getting VO-wide image lists that need authentication to AppDB: Vmcatcher setup, Obtaining an access token, Image list store.

Use the same APEL/SSM as the grid site

- Cloud usage records are sent to APEL through the ssmsend program installed on cert-37.pd.infn.it:

[root@cert-37 ~]# cat /etc/cron.d/ssm-cloud
# send buffered usage records to APEL
30 */24 * * * root /usr/bin/ssmsend -c /etc/apel/sender-cloud.cfg

- It is therefore necessary to install and configure NFS on egi-cloud:

[root@egi-cloud ~]# mkdir -p /var/spool/apel/outgoing/openstack
[root@egi-cloud ~]# apt-get install nfs-kernel-server -y
[root@egi-cloud ~]# cat<<EOF>>/etc/exports
/var/spool/apel/outgoing/openstack cert-37.pd.infn.it(rw,sync)
EOF
[root@egi-cloud ~]$ service nfs-kernel-server restart
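Appending with cat <<EOF >> /etc/exports adds another copy of the export line every time the step is repeated. A minimal sketch of an idempotent alternative follows; the helper name append_once is hypothetical and not part of the original procedure.

```shell
# Hypothetical helper: append a line to a file only if it is not already there.
append_once() {
    local line="$1" file="$2"
    # -q: quiet, -x: match the whole line, -F: treat the pattern as a fixed string
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Example call for the export used above (commented out so that sourcing has no side effects):
# append_once '/var/spool/apel/outgoing/openstack cert-37.pd.infn.it(rw,sync)' /etc/exports
```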

- In case of APEL nagios probe failure, check if /var/spool/apel/outgoing/openstack is properly mounted by cert-37

- To check whether accounting records are properly received by the APEL server, look at this site

Install the new accounting system (CASO)

- Follow the instructions here

[root@egi-cloud ~]$ pip install caso

- Copy the CA certs bundle in the right place

[root@egi-cloud ~]# cd /usr/lib/python2.7/dist-packages/requests/
[root@egi-cloud ~]# cp /etc/pki/ca-trust/extracted/pem/tls-ca-bundle.pem . ; cp /etc/pki/ca-trust/extracted/openssl/ca-bundle.trust.crt .
[root@egi-cloud ~]# mv cacert.pem cacert.pem.bak; ln -s tls-ca-bundle.pem cacert.pem;

- Configure /etc/caso/caso.conf according to the documentation and test if everything works:

[root@egi-cloud ~]$ mkdir /var/spool/caso /var/log/caso
[root@egi-cloud ~]$ caso-extract -v -d

- Create the cron job

[root@egi-cloud ~]# cat /etc/cron.d/caso
# extract and send usage records to APEL/SSM
10 * * * * root /usr/local/bin/caso-extract >> /var/log/caso/caso.log 2>&1; chmod go+w -R /var/spool/apel/outgoing/openstack/

Troubleshooting

- Passwordless ssh access to egi-cloud from cld-nagios and from egi-cloud to cloud-0* has already been configured

- If cld-nagios cannot ping egi-cloud, make sure that the route "route add -net 192.168.60.0 netmask 255.255.255.0 gw 192.168.114.1" has been added on egi-cloud (the /etc/sysconfig/network-script/route-em1 file should contain the line: 192.168.60.0/24 via 192.168.114.1)

- In case of Nagios alarms, try to restart all cloud services by doing the following:

$ ssh root@egi-cloud
[root@egi-cloud ~]# ./Ubuntu_Juno_Controller.sh restart
[root@egi-cloud ~]# for i in $(seq 1 6); do ssh cloud-0$i.pn.pd.infn.it ./Ubuntu_Juno_Compute.sh restart; done

- Resubmit the Nagios probe and check if it works again

- If the problem persists, check the consistency of the DB by executing:

[root@egi-cloud ~]# python nova-quota-sync.py

- In case of an EGI Nagios alarm, check that the user running the Nagios probes does not also belong to tenants other than "ops"

- in case of reboot of egi-cloud server:

- check its network configuration (use IPMI if not reachable): all 3 interfaces must be up and the default gateway must be 192.168.114.1

- check DNS in /etc/resolv.conf

- check routing with $route -n; if needed, do: $route add default gw 192.168.114.1 dev em1 and $route del default gw 90.147.77.254 dev em3. Also make sure there is no route for the 90.147.77.0 network.

- disable the keystone native service (do: $ service keystone stop) and restart all cloud services

- check if gluster mountpoints are properly mounted
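The default gateway check in the list above can be scripted. The following is a minimal sketch that reads route -n output on standard input; the function names default_gateways and check_default_gw are hypothetical, and the expected gateway is the 192.168.114.1 value stated on this page.

```shell
# Hypothetical helper: print the default gateway(s) found in `route -n` output on stdin.
default_gateways() {
    # In `route -n` output the default route has destination 0.0.0.0;
    # the second column is the gateway.
    awk '$1 == "0.0.0.0" { print $2 }'
}

# Hypothetical check: succeed only if there is exactly one default route
# and it points to the gateway expected on this testbed.
check_default_gw() {
    local expected="192.168.114.1" found
    found=$(default_gateways)
    [ "$found" = "$expected" ]
}

# Example call (commented out so that sourcing has no side effects):
# route -n | check_default_gw || echo "unexpected default gateway"
```

If a second default route is present (for example via 90.147.77.254), the check fails, which matches the manual fix described in the list.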

- in case of reboot of cloud-0* server:

- check its network configuration (use IPMI if not reachable): both interfaces must be up and the default gateway must be 192.168.114.1

- check if all partitions in /etc/fstab are properly mounted (do: $ df -h)
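Instead of comparing df -h with /etc/fstab by eye, the check can be sketched as a small function that lists the fstab mount points that are not currently mounted. This is an illustrative sketch: the function name unmounted_from_fstab is hypothetical, it compares mount point strings literally, and it assumes the mounts file is not empty (true for /proc/mounts on a running system).

```shell
# Hypothetical helper: list fstab mount points that are not currently mounted.
# Arguments default to the real files; they can be overridden for testing.
unmounted_from_fstab() {
    local fstab="${1:-/etc/fstab}" mounts="${2:-/proc/mounts}"
    awk '
        # First file (mounts): remember every active mount point (column 2).
        NR == FNR { mounted[$2] = 1; next }
        # Second file (fstab): skip comments, short lines and
        # entries whose target is not a path (such as swap).
        /^[[:space:]]*#/ || NF < 2 || $2 !~ /^\// { next }
        # Print fstab mount points that are not in the mounted set.
        !($2 in mounted) { print $2 }
    ' "$mounts" "$fstab"
}

# Example call (prints nothing when everything in /etc/fstab is mounted):
# unmounted_from_fstab
```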

progetti/cloud-areapd/egi_federated_cloud/juno-ubuntu1404_testbed.txt · Last modified: 2016/09/23 16:16 by verlato@infn.it