
Rocky-CentOS7 Testbed

Fully integrated Resource Provider INFN-PADOVA-STACK, in production from 4 February 2019 until its decommissioning on 18 September 2023.

EGI Monitoring/Accounting

Local Monitoring/Accounting

Local dashboard

Layout

- Controller + Network node: egi-cloud.pd.infn.it

- Compute nodes: cloud-01.pn.pd.infn.it to cloud-07.pn.pd.infn.it

- Storage node (images and block storage): cld-stg-01.pd.infn.it

- OneData provider: one-data-01.pd.infn.it

- Cloudkeeper, Cloudkeeper-OS, cASO and cloudBDII: egi-cloud-ha.pd.infn.it

- Cloud site-BDII: egi-cloud-sbdii.pd.infn.it (VM on cert-03 server)

- Accounting SSM sender: cert-37.pd.infn.it (VM on cert-03 server)

- Network layout available here (authorized users only)

OpenStack configuration

The Controller/Network node and the Compute nodes were installed following the official OpenStack documentation.

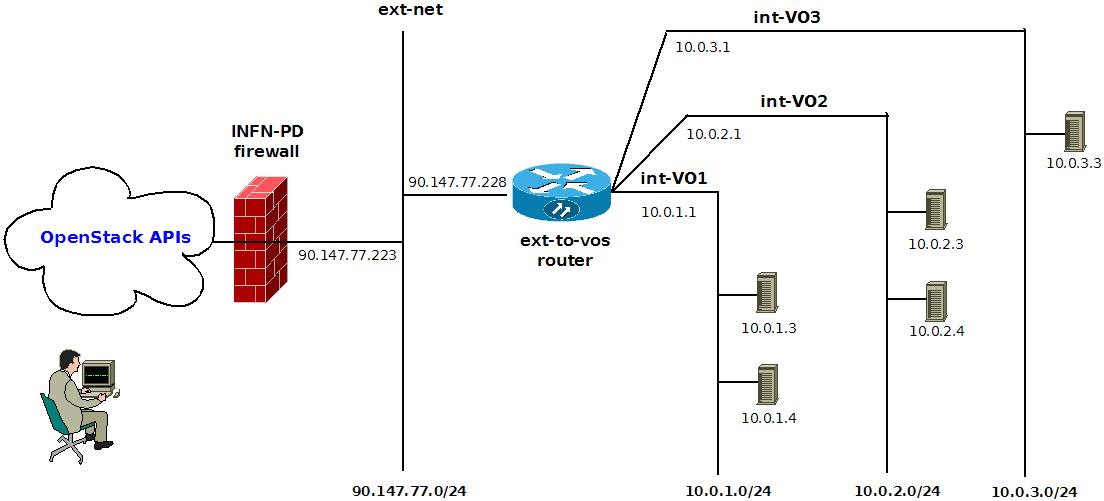

We created one project for each EGI FedCloud VO supported, a router and various nets and subnets obtaining the following network topology:

The Glance and Cinder storage areas are NFS shares exported by 192.168.61.100. Only the Glance share is added to /etc/fstab; the Cinder share is not, because it is mounted by the Cinder NFS driver:

    yum install -y nfs-utils
    mkdir -p /var/lib/glance/images
    cat<<EOF>>/etc/fstab
    192.168.61.100:/glance-egi /var/lib/glance/images nfs defaults
    EOF
    mount -a
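Re-running the `cat <<EOF >>` step above appends a duplicate entry to /etc/fstab each time. A minimal sketch of an idempotent alternative, using a hypothetical helper `append_once` (not provided by any package on this site), appends the line only when the exact same line is not already in the file:

```shell
#!/bin/sh
# Hypothetical helper: append LINE to FILE only if the exact line is missing.
append_once() {
    file="$1"
    line="$2"
    # -q quiet, -x match the whole line, -F treat LINE as a fixed string;
    # a missing FILE counts as "line not present" (error message suppressed).
    if ! grep -qxF -- "$line" "$file" 2>/dev/null; then
        printf '%s\n' "$line" >> "$file"
    fi
}

# Example use for the Glance NFS share (as root on the controller):
# append_once /etc/fstab "192.168.61.100:/glance-egi /var/lib/glance/images nfs defaults"
# mount -a
```

The same helper would also fit the later edits of /etc/exports and /etc/rsyslog.conf on this page.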

Some Cinder-specific settings follow the "cinder with NFS backend" documentation.

EGI FedCloud specific configuration

(see EGI Doc)

Install the CA certificates and the software for fetching the CRLs on the controller (egi-cloud), on the compute nodes (cloud-01 to cloud-07) and on egi-cloud-ha:

    systemctl stop httpd
    curl -L http://repository.egi.eu/sw/production/cas/1/current/repo-files/EGI-trustanchors.repo | sudo tee /etc/yum.repos.d/EGI-trustanchors.repo
    yum install -y ca-policy-egi-core fetch-crl http://artifacts.pd.infn.it/packages/CAP/misc/CentOS7/noarch/ca_TERENA-SSL-CA-3-1.0-1.el7.centos.noarch.rpm
    systemctl enable fetch-crl-cron.service
    systemctl start fetch-crl-cron.service
    cd /etc/pki/ca-trust/source/anchors
    ln -s /etc/grid-security/certificates/*.pem .
    update-ca-trust extract
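The anchors created with `ln -s` point into /etc/grid-security/certificates, whose content changes when the IGTF packages are updated, so links can be left dangling. A small sketch, assuming a hypothetical helper `list_dangling_links`, lists such links so they can be cleaned up before re-running `update-ca-trust extract`:

```shell
#!/bin/sh
# Hypothetical helper: print every symlink in DIR whose target no longer exists.
list_dangling_links() {
    dir="$1"
    for f in "$dir"/*; do
        # -L: f is a symlink; ! -e: its target does not exist
        if [ -L "$f" ] && [ ! -e "$f" ]; then
            printf '%s\n' "$f"
        fi
    done
}

# Example (as root on egi-cloud, cloud-0* and egi-cloud-ha):
# list_dangling_links /etc/pki/ca-trust/source/anchors
# find /etc/pki/ca-trust/source/anchors -xtype l -delete && update-ca-trust extract
```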

On the egi-cloud-ha node, also install the CMD-OS repository:

yum -y install http://repository.egi.eu/sw/production/cmd-os/1/centos7/x86_64/base/cmd-os-release-1.0.1-1.el7.centos.noarch.rpm

Install AAI integration and VOMS support components

Taken from the official EGI documentation.

To be executed on egi-cloud.pd.infn.it node:

    vo=(ops dteam fedcloud.egi.eu enmr.eu)
    volast=enmr.eu
    EGIHOST=egi-cloud.pd.infn.it
    KYPORT=443
    HZPORT=8443
    yum install -y gridsite mod_auth_openidc
    sed -i "s|443|8443|g" /etc/httpd/conf.d/ssl.conf
    sed -i "s|/etc/pki/tls/certs/localhost.crt|/etc/grid-security/hostcert.pem|g" /etc/httpd/conf.d/ssl.conf
    sed -i "s|/etc/pki/tls/private/localhost.key|/etc/grid-security/hostkey.pem|g" /etc/httpd/conf.d/ssl.conf
    openstack-config --set /etc/keystone/keystone.conf auth methods password,token,openid,mapped
    openstack-config --set /etc/keystone/keystone.conf openid remote_id_attribute HTTP_OIDC_ISS
    openstack-config --set /etc/keystone/keystone.conf federation trusted_dashboard https://$EGIHOST:$HZPORT/dashboard/auth/websso/
    curl -L https://raw.githubusercontent.com/openstack/keystone/master/etc/sso_callback_template.html > /etc/keystone/sso_callback_template.html
    systemctl restart httpd.service
    source admin-openrc.sh
    openstack identity provider create --remote-id https://aai-dev.egi.eu/oidc/ egi.eu
    echo [ > mapping.egi.json
    echo [ > mapping.voms.json
    for i in ${vo[@]}
    do
      openstack group create $i
      openstack role add member --group $i --project VO:$i
      groupid=$(openstack group show $i -f value -c id)
      # OIDC (EGI Check-in) rule for this VO
      cat <<EOF>>mapping.egi.json
    {
      "local": [
        {
          "user": {
            "type":"ephemeral",
            "name":"{0}"
          },
          "group": {
            "id": "$groupid"
          }
        }
      ],
      "remote": [
        {
          "type": "HTTP_OIDC_SUB"
        },
        {
          "type": "HTTP_OIDC_ISS",
          "any_one_of": [
            "https://aai-dev.egi.eu/oidc/"
          ]
        },
        {
          "type": "OIDC-edu_person_entitlements",
          "regex": true,
          "any_one_of": [
            "^urn:mace:egi.eu:group:$i:role=vm_operator#aai.egi.eu$"
          ]
        }
      ]
    EOF
      [ $i = $volast ] || ( echo "}," >> mapping.egi.json )
      [ $i = $volast ] && ( echo "}" >> mapping.egi.json )
      [ $i = $volast ] && ( echo "]" >> mapping.egi.json )
      # VOMS (GridSite) rule for this VO
      cat <<EOF>>mapping.voms.json
    {
      "local": [
        {
          "user": {
            "type":"ephemeral",
            "name":"{0}"
          },
          "group": {
            "id":"$groupid"
          }
        }
      ],
      "remote": [
        {
          "type":"GRST_CONN_AURI_0"
        },
        {
          "type":"GRST_VOMS_FQANS",
          "any_one_of":[
            "^/$i/.*"
          ],
          "regex":true
        }
      ]
    EOF
      [ $i = $volast ] || ( echo "}," >> mapping.voms.json )
      [ $i = $volast ] && ( echo "}" >> mapping.voms.json )
      [ $i = $volast ] && ( echo "]" >> mapping.voms.json )
    done
    openstack mapping create --rules mapping.egi.json egi-mapping
    openstack federation protocol create --identity-provider egi.eu --mapping egi-mapping openid
    openstack mapping create --rules mapping.voms.json voms
    openstack federation protocol create --identity-provider egi.eu --mapping voms mapped
    # VOMS server descriptions (.lsc files), one directory per VO
    mkdir -p /etc/grid-security/vomsdir/${vo[0]}
    cat > /etc/grid-security/vomsdir/${vo[0]}/lcg-voms2.cern.ch.lsc <<EOF
    /DC=ch/DC=cern/OU=computers/CN=lcg-voms2.cern.ch
    /DC=ch/DC=cern/CN=CERN Grid Certification Authority
    EOF
    cat > /etc/grid-security/vomsdir/${vo[0]}/voms2.cern.ch.lsc <<EOF
    /DC=ch/DC=cern/OU=computers/CN=voms2.cern.ch
    /DC=ch/DC=cern/CN=CERN Grid Certification Authority
    EOF
    mkdir -p /etc/grid-security/vomsdir/${vo[1]}
    cat > /etc/grid-security/vomsdir/${vo[1]}/voms2.hellasgrid.gr.lsc <<EOF
    /C=GR/O=HellasGrid/OU=hellasgrid.gr/CN=voms2.hellasgrid.gr
    /C=GR/O=HellasGrid/OU=Certification Authorities/CN=HellasGrid CA 2016
    EOF
    cat > /etc/grid-security/vomsdir/${vo[1]}/voms.hellasgrid.gr.lsc <<EOF
    /C=GR/O=HellasGrid/OU=hellasgrid.gr/CN=voms.hellasgrid.gr
    /C=GR/O=HellasGrid/OU=Certification Authorities/CN=HellasGrid CA 2016
    EOF
    mkdir -p /etc/grid-security/vomsdir/${vo[2]}
    cat > /etc/grid-security/vomsdir/${vo[2]}/voms1.grid.cesnet.cz.lsc <<EOF
    /DC=cz/DC=cesnet-ca/O=CESNET/CN=voms1.grid.cesnet.cz
    /DC=cz/DC=cesnet-ca/O=CESNET CA/CN=CESNET CA 3
    EOF
    cat > /etc/grid-security/vomsdir/${vo[2]}/voms2.grid.cesnet.cz.lsc <<EOF
    /DC=cz/DC=cesnet-ca/O=CESNET/CN=voms2.grid.cesnet.cz
    /DC=cz/DC=cesnet-ca/O=CESNET CA/CN=CESNET CA 3
    EOF
    mkdir -p /etc/grid-security/vomsdir/${vo[3]}
    cat > /etc/grid-security/vomsdir/${vo[3]}/voms2.cnaf.infn.it.lsc <<EOF
    /C=IT/O=INFN/OU=Host/L=CNAF/CN=voms2.cnaf.infn.it
    /C=IT/O=INFN/CN=INFN Certification Authority
    EOF
    cat > /etc/grid-security/vomsdir/${vo[3]}/voms-02.pd.infn.it.lsc <<EOF
    /DC=org/DC=terena/DC=tcs/C=IT/L=Frascati/O=Istituto Nazionale di Fisica Nucleare/CN=voms-02.pd.infn.it
    /C=NL/ST=Noord-Holland/L=Amsterdam/O=TERENA/CN=TERENA eScience SSL CA 3
    EOF
    # Keystone virtual host with OIDC (EGI Check-in) and VOMS (GridSite) support.
    # NOTE: set CA_CERT before running this; if it is empty, SSLCACertificateFile
    # is written without an argument and httpd will refuse the configuration.
    cat <<EOF>/etc/httpd/conf.d/wsgi-keystone-oidc-voms.conf
    Listen $KYPORT
    <VirtualHost *:$KYPORT>
        OIDCSSLValidateServer Off
        OIDCProviderTokenEndpointAuth client_secret_basic
        OIDCResponseType "code"
        OIDCClaimPrefix "OIDC-"
        OIDCClaimDelimiter ;
        OIDCScope "openid profile email refeds_edu eduperson_entitlement"
        OIDCProviderMetadataURL https://aai-dev.egi.eu/oidc/.well-known/openid-configuration
        OIDCClientID <your OIDC client token>
        OIDCClientSecret <your OIDC client secret>
        OIDCCryptoPassphrase somePASSPHRASE
        OIDCRedirectURI https://$EGIHOST:$KYPORT/v3/auth/OS-FEDERATION/websso/openid/redirect

        # OAuth for CLI access
        OIDCOAuthIntrospectionEndpoint https://aai-dev.egi.eu/oidc/introspect
        OIDCOAuthClientID <your OIDC client token>
        OIDCOAuthClientSecret <your OIDC client secret>
        # OIDCOAuthRemoteUserClaim sub

        # Increase Shm cache size for supporting long entitlements
        OIDCCacheShmEntrySizeMax 33297

        # Use the IGTF trust anchors for CAs and CRLs
        SSLCACertificatePath /etc/grid-security/certificates/
        SSLCARevocationPath /etc/grid-security/certificates/
        SSLCACertificateFile $CA_CERT
        SSLEngine on
        SSLCertificateFile /etc/grid-security/hostcert.pem
        SSLCertificateKeyFile /etc/grid-security/hostkey.pem

        # Verify clients if they send their certificate
        SSLVerifyClient optional
        SSLVerifyDepth 10
        SSLOptions +StdEnvVars +ExportCertData
        SSLProtocol all -SSLv2
        SSLCipherSuite ALL:!ADH:!EXPORT:!SSLv2:RC4+RSA:+HIGH:+MEDIUM:+LOW

        WSGIDaemonProcess keystone-public processes=5 threads=1 user=keystone group=keystone display-name=%{GROUP}
        WSGIProcessGroup keystone-public
        WSGIScriptAlias / /usr/bin/keystone-wsgi-public
        WSGIApplicationGroup %{GLOBAL}
        WSGIPassAuthorization On
        LimitRequestBody 114688
        <IfVersion >= 2.4>
            ErrorLogFormat "%{cu}t %M"
        </IfVersion>
        ErrorLog /var/log/httpd/keystone.log
        CustomLog /var/log/httpd/keystone_access.log combined

        <Directory /usr/bin>
            <IfVersion >= 2.4>
                Require all granted
            </IfVersion>
            <IfVersion < 2.4>
                Order allow,deny
                Allow from all
            </IfVersion>
        </Directory>

        <Location /v3/OS-FEDERATION/identity_providers/egi.eu/protocols/mapped/auth>
            # populate ENV variables
            GridSiteEnvs on
            # turn off directory listings
            GridSiteIndexes off
            # accept GSI proxies from clients
            GridSiteGSIProxyLimit 4
            # disable GridSite method extensions
            GridSiteMethods ""
            Require all granted
            Options -MultiViews
        </Location>

        <Location ~ "/v3/auth/OS-FEDERATION/websso/openid">
            AuthType openid-connect
            Require valid-user
            #Require claim iss:https://aai-dev.egi.eu/
            LogLevel debug
        </Location>

        <Location ~ "/v3/OS-FEDERATION/identity_providers/egi.eu/protocols/openid/auth">
            AuthType oauth20
            Require valid-user
            #Require claim iss:https://aai-dev.egi.eu/
            LogLevel debug
        </Location>
    </VirtualHost>

    Alias /identity /usr/bin/keystone-wsgi-public
    <Location /identity>
        SetHandler wsgi-script
        Options +ExecCGI
        WSGIProcessGroup keystone-public
        WSGIApplicationGroup %{GLOBAL}
        WSGIPassAuthorization On
    </Location>
    EOF
    sed -i "s|http://$EGIHOST:$KYPORT|https://$EGIHOST|g" /etc/*/*.conf
    source admin-openrc.sh
    for i in public internal admin
    do
      keyendid=$(openstack endpoint list --service keystone --interface $i -f value -c ID)
      openstack endpoint set --url https://$EGIHOST/v3 $keyendid
    done
    systemctl restart httpd.service
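The loop above assembles the mapping files by echoing brackets and commas by hand, which breaks easily when the VO list changes. As an alternative sketch (not the procedure used on this site), a hypothetical function `voms_mapping_json` prints the same VOMS rules as a well-formed JSON array, taking "VO=GROUP_ID" pairs as arguments:

```shell
#!/bin/sh
# Hypothetical helper (not part of Keystone or the EGI tooling): print the VOMS
# mapping rules as a JSON array. Arguments are "VO=GROUP_ID" pairs.
voms_mapping_json() {
    sep=""
    printf '[\n'
    for pair in "$@"; do
        vo=${pair%%=*}    # text before the first "="
        gid=${pair#*=}    # text after the first "="
        # One rule per VO: a proxy with an FQAN under /<vo>/ maps to the VO group.
        printf '%s  {"local": [{"user": {"type": "ephemeral", "name": "{0}"}, "group": {"id": "%s"}}],\n   "remote": [{"type": "GRST_CONN_AURI_0"}, {"type": "GRST_VOMS_FQANS", "any_one_of": ["^/%s/.*"], "regex": true}]}' "$sep" "$gid" "$vo"
        # Every rule after the first one is preceded by a comma and a newline.
        sep=",
"
    done
    printf '\n]\n'
}

# Example (on egi-cloud, after "source admin-openrc.sh"):
# voms_mapping_json "ops=$(openstack group show ops -f value -c id)" \
#                   "dteam=$(openstack group show dteam -f value -c id)" > mapping.voms.json
```

Because the separator is emitted before each rule rather than after, there is no special case for the last VO.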

OpenStack Dashboard (Horizon) Configuration:

- Edit the /etc/openstack-dashboard/local_settings file and set:

    OPENSTACK_KEYSTONE_URL = "https://%s/v3" % OPENSTACK_HOST
    WEBSSO_ENABLED = True
    WEBSSO_INITIAL_CHOICE = "credentials"
    WEBSSO_CHOICES = (
        ("credentials", _("Keystone Credentials")),
        ("openid", _("EGI Check-in"))
    )

To change the dashboard logo, copy the desired SVG file to /usr/share/openstack-dashboard/openstack_dashboard/static/dashboard/img/logo-splash.svg

To expose other OpenStack services publicly over HTTPS, do not forget to create the files /etc/httpd/conf.d/wsgi-{nova,neutron,glance,cinder}.conf and to update the corresponding endpoints before restarting everything.

Install FedCloud BDII

(See the EGI integration guide and the BDII configuration guide.) Install the resource BDII and the cloud-info-provider on egi-cloud-ha (with the CMD-OS repository already installed):

yum -y install bdii cloud-info-provider cloud-info-provider-openstack

Customize the configuration file /etc/cloud-info-provider/sample.openstack.yaml with the local site information, and rename it to /etc/cloud-info-provider/openstack.yaml

Customize the file /etc/cloud-info-provider/openstack.rc with the right credentials, for example:

    export OS_AUTH_URL=https://egi-cloud.pd.infn.it:443/v3
    export OS_PROJECT_DOMAIN_ID=default
    export OS_REGION_NAME=RegionOne
    export OS_USER_DOMAIN_ID=default
    export OS_PROJECT_NAME=admin
    export OS_IDENTITY_API_VERSION=3
    export OS_USERNAME=accounting
    export OS_PASSWORD=<the user password>
    export OS_AUTH_TYPE=password
    export OS_CACERT=/etc/pki/tls/certs/ca-bundle.crt
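Before wiring the rc file into the BDII provider, it can help to verify that it defines everything the provider needs. A sketch with a hypothetical helper `check_openrc`, which sources the file in a subshell so the current environment is left untouched; the list of required variables is an assumption based on the example above:

```shell
#!/bin/sh
# Hypothetical helper: source an openrc file in a subshell and print every
# required OS_* variable that is missing or empty. Returns 1 if any is missing.
check_openrc() {
    (
        . "$1" || exit 2
        rc=0
        # Assumed list of variables, taken from the example openstack.rc above.
        for v in OS_AUTH_URL OS_USERNAME OS_PASSWORD OS_PROJECT_NAME \
                 OS_USER_DOMAIN_ID OS_PROJECT_DOMAIN_ID OS_IDENTITY_API_VERSION; do
            eval "val=\${$v:-}"
            if [ -z "$val" ]; then
                echo "missing: $v"
                rc=1
            fi
        done
        exit $rc
    )
}

# Example: check_openrc /etc/cloud-info-provider/openstack.rc && echo "rc file looks complete"
```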

Create the file /var/lib/bdii/gip/provider/cloud-info-provider that calls the provider with the correct options for your site, for example:

    # Quote EOF so that $(...) and $P are expanded when the provider runs,
    # not once when this file is written.
    cat<<'EOF'>/var/lib/bdii/gip/provider/cloud-info-provider
    #!/bin/sh
    . /etc/cloud-info-provider/openstack.rc
    for P in $(openstack project list -c Name -f value); do
        cloud-info-provider-service --yaml /etc/cloud-info-provider/openstack.yaml \
            --os-tenant-name $P \
            --middleware openstack
    done
    EOF

Run the cloud-info-provider script manually and check that the output contains the complete LDIF. To do so, execute:

    chmod +x /var/lib/bdii/gip/provider/cloud-info-provider
    /var/lib/bdii/gip/provider/cloud-info-provider
    /sbin/chkconfig bdii on
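To make the "complete LDIF" check a little less manual, a hypothetical helper `count_ldif_entries` counts the entries (lines starting with `dn:`) read on standard input and fails when there are none. It is only a sanity check, assuming that a healthy provider emits at least one entry; it does not validate the GLUE2 content itself:

```shell
#!/bin/sh
# Hypothetical helper: count LDIF entries on stdin. In LDIF every entry starts
# with a "dn:" line at column 0 (continuation lines start with a space).
count_ldif_entries() {
    # grep -c prints 0 and exits 1 when nothing matches; "|| true" keeps going.
    n=$(grep -c '^dn:' || true)
    echo "$n"
    [ "$n" -gt 0 ]
}

# Examples:
# /var/lib/bdii/gip/provider/cloud-info-provider | count_ldif_entries
# ldapsearch -x -h localhost -p 2170 -b o=glue | count_ldif_entries
```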

Now you can start the bdii service:

systemctl start bdii

Use the command below to see if the information is being published:

ldapsearch -x -h localhost -p 2170 -b o=glue

Do not forget to open port 2170:

    firewall-cmd --add-port=2170/tcp
    firewall-cmd --permanent --add-port=2170/tcp
    systemctl restart firewalld

Information on how to set up the site-BDII on egi-cloud-sbdii.pd.infn.it is available here

Add the cloud-info-provider to the site-BDII on egi-cloud-sbdii.pd.infn.it by adding lines like the following to site.def:

    BDII_REGIONS="CLOUD BDII"
    BDII_CLOUD_URL="ldap://egi-cloud-ha.pn.pd.infn.it:2170/GLUE2GroupID=cloud,o=glue"
    BDII_BDII_URL="ldap://egi-cloud-sbdii.pd.infn.it:2170/mds-vo-name=resource,o=grid"
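In YAIM, each name listed in BDII_REGIONS needs a matching BDII_<REGION>_URL variable, so a forgotten URL silently drops a region. A sketch of a consistency check, using a hypothetical helper `check_bdii_regions` that sources site.def in a subshell:

```shell
#!/bin/sh
# Hypothetical helper: for every region in BDII_REGIONS, print its URL or
# report the missing BDII_<REGION>_URL variable. Returns 1 if any is missing.
check_bdii_regions() {
    (
        . "$1" || exit 2
        rc=0
        for r in $BDII_REGIONS; do
            eval "url=\${BDII_${r}_URL:-}"
            if [ -z "$url" ]; then
                echo "missing BDII_${r}_URL"
                rc=1
            else
                echo "$r -> $url"
            fi
        done
        exit $rc
    )
}

# Example (on egi-cloud-sbdii, with the path of your site.def):
# check_bdii_regions /path/to/site.def
```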

Use the same APEL/SSM as the grid site

Cloud usage records are sent to APEL through the ssmsend program installed on cert-37.pd.infn.it, once a day at 00:30 (`*/24` in the hour field only matches hour 0):

    [root@cert-37 ~]# cat /etc/cron.d/ssm-cloud
    # send buffered usage records to APEL
    30 */24 * * * root /usr/bin/ssmsend -c /etc/apel/sender-cloud.cfg

It is therefore necessary to install and configure NFS on egi-cloud-ha, so that cert-37 can read the records written there:

    [root@egi-cloud-ha ~]# yum -y install nfs-utils
    [root@egi-cloud-ha ~]# mkdir -p /var/spool/apel/outgoing/openstack
    [root@egi-cloud-ha ~]# cat<<EOF>>/etc/exports
    /var/spool/apel/outgoing/openstack cert-37.pd.infn.it(rw,sync)
    EOF
    [root@egi-cloud-ha ~]# systemctl start nfs-server

In case of APEL Nagios probe failures, check that /var/spool/apel/outgoing/openstack is properly mounted on cert-37.
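A quick way to run this check, sketched as a hypothetical helper `is_nfs_mounted` that looks the mount point up in /proc/mounts and requires an nfs* filesystem type. It assumes the share is mounted on cert-37 under the same path, /var/spool/apel/outgoing/openstack:

```shell
#!/bin/sh
# Hypothetical helper: succeed if TARGET appears in a mounts table (default
# /proc/mounts; fields: source target fstype options dump pass) with an nfs*
# filesystem type.
is_nfs_mounted() {
    target="${1%/}"             # drop a trailing slash, as /proc/mounts has none
    table="${2:-/proc/mounts}"
    awk -v t="$target" '$2 == t && $3 ~ /^nfs/ { found = 1 } END { exit !found }' "$table"
}

# Example (on cert-37):
# is_nfs_mounted /var/spool/apel/outgoing/openstack || echo "APEL spool not mounted"
```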

To check whether accounting records are properly received by the APEL server, look at this site.

Install the accounting system (cASO)

(see the cASO installation guide)

On egi-cloud, create the accounting user and role, and set the proper policies:

    openstack user create --domain default --password <ACCOUNTING_PASSWORD> accounting
    openstack role create accounting
    for i in VO:fedcloud.egi.eu VO:enmr.eu VO:ops; do
        openstack role add --project $i --user accounting accounting
    done

Then add the following two rules inside the top-level JSON object of /etc/keystone/policy.json (appending them after the closing brace, or without the separating comma, would make the file invalid JSON):

    "accounting_role": "role:accounting",
    "identity:list_users": "rule:admin_required or rule:accounting_role"
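Editing policy.json by hand is error-prone. A minimal sketch of doing the merge programmatically, assuming python3 is available on egi-cloud and using a hypothetical helper name `add_accounting_policy`, loads the file as JSON, sets the two accounting rules and writes it back:

```shell
#!/bin/sh
# Hypothetical helper: set the two accounting rules in a Keystone policy.json
# while keeping the file valid JSON (requires python3).
add_accounting_policy() {
    python3 - "$1" <<'PY'
import json
import sys

path = sys.argv[1]
with open(path) as f:
    policy = json.load(f)

# Same two rules as in the text; existing keys with these names are replaced.
policy["accounting_role"] = "role:accounting"
policy["identity:list_users"] = "rule:admin_required or rule:accounting_role"

with open(path, "w") as f:
    json.dump(policy, f, indent=4, sort_keys=True)
    f.write("\n")
PY
}

# Example (keep a backup first):
# cp -p /etc/keystone/policy.json /etc/keystone/policy.json.bak
# add_accounting_policy /etc/keystone/policy.json
```

Note that the rewrite normalizes the indentation and key order of the whole file.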

Install cASO on egi-cloud-ha (with CMD-OS repo already installed):

yum -y install caso

Edit the /etc/caso/caso.conf file

    openstack-config --set /etc/caso/caso.conf DEFAULT site_name INFN-PADOVA-STACK
    openstack-config --set /etc/caso/caso.conf DEFAULT projects VO:ops,VO:fedcloud.egi.eu,VO:enmr.eu
    openstack-config --set /etc/caso/caso.conf DEFAULT messengers caso.messenger.ssm.SSMMessengerV02
    openstack-config --set /etc/caso/caso.conf DEFAULT log_dir /var/log/caso
    openstack-config --set /etc/caso/caso.conf DEFAULT log_file caso.log
    openstack-config --set /etc/caso/caso.conf keystone_auth auth_type password
    openstack-config --set /etc/caso/caso.conf keystone_auth username accounting
    openstack-config --set /etc/caso/caso.conf keystone_auth password ACCOUNTING_PASSWORD
    openstack-config --set /etc/caso/caso.conf keystone_auth auth_url https://egi-cloud.pd.infn.it/v3
    openstack-config --set /etc/caso/caso.conf keystone_auth cafile /etc/pki/tls/certs/ca-bundle.crt
    openstack-config --set /etc/caso/caso.conf keystone_auth project_domain_id default
    openstack-config --set /etc/caso/caso.conf keystone_auth project_domain_name default
    openstack-config --set /etc/caso/caso.conf keystone_auth user_domain_id default
    openstack-config --set /etc/caso/caso.conf keystone_auth user_domain_name default

Create the directories

mkdir /var/spool/caso /var/log/caso /var/spool/apel/outgoing/openstack/

Test it

caso-extract -v -d

Create the cron job

    cat <<EOF>/etc/cron.d/caso
    # extract and send usage records to APEL/SSM
    10 * * * * root /usr/bin/caso-extract >> /var/log/caso/caso.log 2>&1 ; chmod go+w -R /var/spool/apel/outgoing/openstack/
    EOF

Install Cloudkeeper and Cloudkeeper-OS

On egi-cloud.pd.infn.it create a cloudkeeper user in keystone:

openstack user create --domain default --password CLOUDKEEPER_PASS cloudkeeper

and add the cloudkeeper user to each project with the user role:

for i in VO:ops VO:fedcloud.egi.eu VO:enmr.eu; do openstack role add --project $i --user cloudkeeper user; done

Install Cloudkeeper and Cloudkeeper-OS on egi-cloud-ha (with CMD-OS repo already installed):

yum -y install cloudkeeper cloudkeeper-os

Edit /etc/cloudkeeper/cloudkeeper.yml and add the list of VO image lists and the IP address where needed:

    - https://PERSONAL_ACCESS_TOKEN:x-oauth-basic@vmcaster.appdb.egi.eu/store/vo/fedcloud.egi.eu/image.list
    - https://PERSONAL_ACCESS_TOKEN:x-oauth-basic@vmcaster.appdb.egi.eu/store/vo/ops/image.list
    - https://PERSONAL_ACCESS_TOKEN:x-oauth-basic@vmcaster.appdb.egi.eu/store/vo/enmr.eu/image.list
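The image-list entries differ only in the VO name. A sketch with a hypothetical helper `image_list_items` that prints the list items for a given AppDB personal access token and a set of VOs; the output still has to be indented to match the surrounding keys in cloudkeeper.yml:

```shell
#!/bin/sh
# Hypothetical helper: print one AppDB image-list URL (as a YAML list item)
# per VO. First argument: the personal access token; the rest: VO names.
image_list_items() {
    token="$1"
    shift
    for vo in "$@"; do
        printf '%s\n' "- https://$token:x-oauth-basic@vmcaster.appdb.egi.eu/store/vo/$vo/image.list"
    done
}

# Example:
# image_list_items PERSONAL_ACCESS_TOKEN fedcloud.egi.eu ops enmr.eu
```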

Edit the /etc/cloudkeeper-os/cloudkeeper-os.conf file

    openstack-config --set /etc/cloudkeeper-os/cloudkeeper-os.conf DEFAULT log_file cloudkeeper-os.log
    openstack-config --set /etc/cloudkeeper-os/cloudkeeper-os.conf DEFAULT log_dir /var/log/cloudkeeper-os/
    openstack-config --set /etc/cloudkeeper-os/cloudkeeper-os.conf keystone_authtoken auth_url https://egi-cloud.pd.infn.it/v3
    openstack-config --set /etc/cloudkeeper-os/cloudkeeper-os.conf keystone_authtoken username cloudkeeper
    openstack-config --set /etc/cloudkeeper-os/cloudkeeper-os.conf keystone_authtoken password CLOUDKEEPER_PASS
    openstack-config --set /etc/cloudkeeper-os/cloudkeeper-os.conf keystone_authtoken cafile /etc/pki/tls/certs/ca-bundle.crt
    openstack-config --set /etc/cloudkeeper-os/cloudkeeper-os.conf keystone_authtoken cacert /etc/pki/tls/certs/ca-bundle.crt
    openstack-config --set /etc/cloudkeeper-os/cloudkeeper-os.conf keystone_authtoken user_domain_name default
    openstack-config --set /etc/cloudkeeper-os/cloudkeeper-os.conf keystone_authtoken project_domain_name default

Create the /etc/cloudkeeper-os/voms.json mapping file:

    cat<<EOF>/etc/cloudkeeper-os/voms.json
    {
        "ops": {
            "tenant": "VO:ops"
        },
        "enmr.eu": {
            "tenant": "VO:enmr.eu"
        },
        "fedcloud.egi.eu": {
            "tenant": "VO:fedcloud.egi.eu"
        }
    }
    EOF
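The voms.json mapping follows the "VO:<name>" project naming used throughout this page, so it can be generated from the list of VOs. A sketch with a hypothetical helper `voms_json`, which only works under that naming assumption:

```shell
#!/bin/sh
# Hypothetical helper: print a cloudkeeper-os voms.json mapping each VO name
# to the OpenStack project "VO:<name>".
voms_json() {
    sep=""
    printf '{\n'
    for vo in "$@"; do
        printf '%s    "%s": { "tenant": "VO:%s" }' "$sep" "$vo" "$vo"
        # Every entry after the first one is preceded by a comma and a newline.
        sep=",
"
    done
    printf '\n}\n'
}

# Example:
# voms_json ops enmr.eu fedcloud.egi.eu > /etc/cloudkeeper-os/voms.json
```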

Enable and start the services

    systemctl enable cloudkeeper-os
    systemctl start cloudkeeper-os
    systemctl enable cloudkeeper.timer
    systemctl start cloudkeeper.timer

Installing Squid for CVMFS (optional)

Install and configure squid on cloud-01 and cloud-02 for use from VMs (see https://cvmfs.readthedocs.io/en/stable/cpt-squid.html):

    yum install -y squid
    sed -i "s|/var/spool/squid|/export/data/spool/squid|g" /etc/squid/squid.conf
    # NOTE: squid evaluates http_access rules in order, so the "allow cvmfs" rule
    # appended below must end up before the default "http_access deny all" line.
    cat<<EOF>>/etc/squid/squid.conf
    minimum_expiry_time 0
    max_filedesc 8192
    maximum_object_size 1024 MB
    cache_mem 128 MB
    maximum_object_size_in_memory 128 KB
    # 50 GB disk cache
    cache_dir ufs /export/data/spool/squid 50000 16 256
    acl cvmfs dst cvmfs-stratum-one.cern.ch
    acl cvmfs dst cernvmfs.gridpp.rl.ac.uk
    acl cvmfs dst cvmfs.racf.bnl.gov
    acl cvmfs dst cvmfs02.grid.sinica.edu.tw
    acl cvmfs dst cvmfs.fnal.gov
    acl cvmfs dst cvmfs-atlas-nightlies.cern.ch
    acl cvmfs dst cvmfs-egi.gridpp.rl.ac.uk
    acl cvmfs dst klei.nikhef.nl
    acl cvmfs dst cvmfsrepo.lcg.triumf.ca
    acl cvmfs dst cvmfsrep.grid.sinica.edu.tw
    acl cvmfs dst cvmfs-s1bnl.opensciencegrid.org
    acl cvmfs dst cvmfs-s1fnal.opensciencegrid.org
    http_access allow cvmfs
    EOF
    rm -rf /var/spool/squid
    mkdir -p /export/data/spool/squid
    chown -R squid.squid /export/data/spool/squid
    squid -k parse
    squid -z
    # NOTE: this ulimit only affects the current shell, not the squid service started by systemd
    ulimit -n 8192
    systemctl start squid
    firewall-cmd --permanent --add-port 3128/tcp
    systemctl restart firewalld

Use CVMFS_HTTP_PROXY="http://cloud-01.pn.pd.infn.it:3128|http://cloud-02.pn.pd.infn.it:3128" on the CVMFS clients.

However, it is better to use the already existing squids: CVMFS_HTTP_PROXY="http://squid-01.pd.infn.it:3128|http://squid-02.pd.infn.it:3128"
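In CVMFS_HTTP_PROXY, proxies joined with "|" form one load-balanced group, while groups separated by ";" are tried in order for fail-over. A small sketch, with a hypothetical helper `cvmfs_proxy_group`, that builds one such group from squid host names (port 3128 by default, overridable through a hypothetical SQUID_PORT variable):

```shell
#!/bin/sh
# Hypothetical helper: join squid hosts into one load-balanced CVMFS proxy group.
cvmfs_proxy_group() {
    port="${SQUID_PORT:-3128}"
    out=""
    for h in "$@"; do
        # ${out:+$out|} adds the "|" separator only after the first proxy.
        out="${out:+$out|}http://$h:$port"
    done
    printf '%s\n' "$out"
}

# Example (on a CVMFS client):
# echo "CVMFS_HTTP_PROXY=\"$(cvmfs_proxy_group squid-01.pd.infn.it squid-02.pd.infn.it)\"" >> /etc/cvmfs/default.local
```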

Local Accounting

A local accounting system based on Grafana, InfluxDB and Collectd has been set up following the instructions here.

Local Monitoring

Ganglia

- Install ganglia-gmond on all servers

- Configure cluster and host fields in /etc/ganglia/gmond.conf to point to cld-ganglia.cloud.pd.infn.it server

- Finally: systemctl enable gmond.service; systemctl start gmond.service

Nagios

- Install on the compute nodes: nsca-client, nagios, nagios-plugins-disk, nagios-plugins-procs, nagios-plugins, nagios-common, nagios-plugins-load

- Copy the file cld-nagios:/var/spool/nagios/.ssh/id_rsa.pub to /home/nagios/.ssh/authorized_keys on the controller and on all compute nodes, and to /root/.ssh/authorized_keys on the controller. Also make sure that /home/nagios is the home directory of the nagios user in /etc/passwd.

- Then, on all compute nodes, run:

    $ echo encryption_method=1 >> /etc/nagios/send_nsca.cfg
    $ usermod -a -G libvirt nagios
    $ sed -i 's|#password=|password=NSCA_PASSWORD|g' /etc/nagios/send_nsca.cfg
    # then be sure the files below are in /usr/local/bin:
    $ ls /usr/local/bin/
    check_kvm  check_kvm_wrapper.sh
    $ cat <<EOF > crontab.txt
    # Puppet Name: nagios_check_kvm
    0 */1 * * * /usr/local/bin/check_kvm_wrapper.sh
    EOF
    $ crontab crontab.txt
    $ crontab -l

- On the controller node, add the following line to /etc/nova/policy.json:

"os_compute_api:servers:create:forced_host": ""

and in /etc/cinder/policy.json the line:

"volume_extension:quotas:show": ""

- In the VO:dteam project, create a CirrOS VM named nagios-probe with the tiny flavour and a key pair named dteam-key (save the private key file dteam-key.pem in the /root directory of egi-cloud), and take note of its ID and private IP. Then, on the cld-nagios server, put its ID in the file /var/spool/nagios/egi-cloud-vm-volume_compute_node.sh and its IP in the file /etc/nagios/objects/egi_fedcloud.cfg, in the check_command of the "Openstack Check Metadata" service (e.g.: check_command check_metadata_egi!dteam-key.pem!10.0.2.20).

- On the cld-nagios server, check/modify the content of /var/spool/nagios/*egi*.sh, of the files /etc/nagios/objects/egi* and /usr/lib64/nagios/plugins/*egi*, and of the files owned by the nagios user in /var/spool/nagios (the directory you land in with "su - nagios").

Security incidents and IP traceability

See here for the description of the full process. On egi-cloud, install the CNRS tools, which allow tracking the usage of floating IPs as in the example below:

    [root@egi-cloud ~]# os-ip-trace 90.147.77.229
    +--------------------------------------+-----------+---------------------+---------------------+
    | device id                            | user name | associating date    | disassociating date |
    +--------------------------------------+-----------+---------------------+---------------------+
    | 3002b1f1-bca3-4e4f-b21e-8de12c0b926e | admin     | 2016-11-30 14:01:38 | 2016-11-30 14:03:02 |
    +--------------------------------------+-----------+---------------------+---------------------+

Save and archive important log files:

- On egi-cloud and on each compute node cloud-0*, add the line "*.* @@192.168.60.31:514" to /etc/rsyslog.conf ("@@" means forwarding over TCP) and restart the rsyslog service with "systemctl restart rsyslog". This sends the /var/log/secure and /var/log/messages entries to cld-foreman, where they are stored under /var/mpathd/log/egi-cloud and /var/mpathd/log/cloud-0*.

- On cld-foreman, check that the script /etc/cron.daily/vm-log.sh collects the /var/log/libvirt/qemu/*.log files of egi-cloud and of each cloud-0* compute node (passwordless ssh must be enabled from cld-foreman to each node).

Install ulogd on the controller node:

    yum install -y libnetfilter_log
    yum localinstall -y http://repo.iotti.biz/CentOS/7/x86_64/ulogd-2.0.5-2.el7.lux.x86_64.rpm
    yum localinstall -y http://repo.iotti.biz/CentOS/7/x86_64/libnetfilter_acct-1.0.2-3.el7.lux.1.x86_64.rpm

Then configure /etc/ulogd.conf starting from cld-ctrl-01:/etc/ulogd.conf and setting the accept_src_filter variable appropriately (accept_src_filter=10.0.0.0/16). Copy cld-ctrl-01:/root/ulogd/start-ulogd to egi-cloud:/root/ulogd/start-ulogd, replace the qrouter ID in it and execute /root/ulogd/start-ulogd. Add the line "/root/ulogd/start-ulogd &" to /etc/rc.d/rc.local and make rc.local executable. Then enable and start the service:

systemctl enable ulogd

systemctl start ulogd

Finally, make sure that /etc/rsyslog.conf contains the lines "local6.* /var/log/ulogd.log" and "*.info;mail.none;authpriv.none;cron.none;local6.none /var/log/messages", and restart the rsyslog service.

Troubleshooting

- Passwordless ssh access to egi-cloud from cld-nagios, and from egi-cloud to cloud-0*, has already been configured.

- If cld-nagios cannot ping egi-cloud, make sure that the route "route add -net 192.168.60.0 netmask 255.255.255.0 gw 192.168.114.1" has been added on egi-cloud (the file /etc/sysconfig/network-scripts/route-em1 should contain the line: 192.168.60.0/24 via 192.168.114.1).

- In case of Nagios alarms, try to restart all cloud services by doing the following:

    $ ssh root@egi-cloud
    [root@egi-cloud ~]# ./rocky_controller.sh restart
    [root@egi-cloud ~]# for i in $(seq 1 7); do ssh cloud-0$i ./rocky_compute.sh restart; done

- Resubmit the Nagios probe and check if it works again

- If the problem persists, check the consistency of the DB by executing the command below (this also fixes the quota overview in the dashboard when it is not consistent with the VMs actually active):

[root@egi-cloud ~]# python nova-quota-sync.py

- In case of an EGI Nagios alarm, check that the user running the Nagios probes does not also belong to tenants other than "ops". Also check that the right image and flavour are set in the URL of the service published in the GOCDB.

- In case of a reboot of the egi-cloud server:

- check its network configuration (use IPMI if not reachable): all 4 interfaces must be up and the default gateway must be 90.147.77.254.

- check DNS in /etc/resolv.conf and GATEWAY in /etc/sysconfig/network

- check routing with "$ route -n"; if needed, run "$ ip route replace default via 90.147.77.254". Also make sure there is a route for the 90.147.77.0 network.

- check if storage mountpoints 192.168.61.100:/glance-egi and cinder-egi are properly mounted (do: $ df -h)

- check if port 8472 is open on the local firewall (it is used by linuxbridge vxlan networks)

- In case of a reboot of a cloud-0* server (use IPMI if not reachable): all 3 interfaces must be up and the default route must use 192.168.114.1 as gateway.

- check its network configuration

- check if all partitions in /etc/fstab are properly mounted (do: $ df -h)

- In case of network instabilities, check that GRO is off for all interfaces, e.g.:

    [root@egi-cloud ~]# /sbin/ethtool -k em3 | grep -i generic-receive-offload
    generic-receive-offload: off

- Also check that /sbin/ifup-local exists:

    [root@egi-cloud ~]# cat /sbin/ifup-local
    #!/bin/bash
    case "$1" in
    em1)
        /sbin/ethtool -K $1 gro off
        ;;
    em2)
        /sbin/ethtool -K $1 gro off
        ;;
    em3)
        /sbin/ethtool -K $1 gro off
        ;;
    em4)
        /sbin/ethtool -K $1 gro off
        ;;
    esac
    exit 0
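To check GRO on all four interfaces at once, a hypothetical helper `gro_state` extracts the generic-receive-offload value from `ethtool -k` output, ignoring a trailing `[fixed]` marker (which means the setting cannot be changed on that device):

```shell
#!/bin/sh
# Hypothetical helper: read "ethtool -k" output on stdin and print the
# generic-receive-offload state ("on" or "off").
gro_state() {
    awk -F': ' '$1 == "generic-receive-offload" { split($2, a, " "); print a[1]; exit }'
}

# Example (on egi-cloud):
# for i in em1 em2 em3 em4; do printf '%s %s\n' "$i" "$(/sbin/ethtool -k "$i" | gro_state)"; done
```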

- If you need to change the project quotas, check "openstack help quota set", e.g.:

    [root@egi-cloud ~]# source admin-openrc.sh
    [root@egi-cloud ~]# openstack quota set --cores 184 VO:enmr.eu