
Recover a stuck cinder Volume

List the volumes

$ . ~/keystone_admin.sh

$ nova volume-list --all-tenants

+--------------------------------------+-----------+--------------+------+--------------+--------------------------------------+

| ID                                   | Status    | Display Name | Size | Volume Type  | Attached to                          |

+--------------------------------------+-----------+--------------+------+--------------+--------------------------------------+

| f480ca6a-0b52-499c-94ab-461be861c7fa | available | Biocomp2     | 200  | bulk_storage |                                      |

| 7d95cdc2-69fd-4b39-ae89-2abbfdf9bb7f | available | test         | 20   | bulk_storage |                                      |

| 24aa5fc3-0f08-4910-a354-6ed4d1fe8ea1 | in-use    | Biocomp      | 200  | bulk_storage | 002dc79b-9050-459c-9ec3-f3a53b4bb1fb | <== THIS IS THE BAD GUY

| 86a39713-50ba-4885-8fdc-9d7cdf3d16a8 | in-use    | swap_volume  | 16   | bulk_storage | 55778ac6-e39e-4760-bbcb-7a7551f7a482 |

| 1fb65656-f358-4ab8-8baa-fb0c48a0e18d | in-use    | data-1       | 500  | bulk_storage | 55778ac6-e39e-4760-bbcb-7a7551f7a482 |

| 6feb1a3b-2257-4c3d-84f9-24a476ea64c6 | available | test         | 1    | bulk_storage |                                      |

+--------------------------------------+-----------+--------------+------+--------------+--------------------------------------+
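On a longer listing, the candidates can be narrowed down by keeping only the in-use rows together with the instance each one claims to be attached to. The following is a minimal sketch, assuming the table layout shown above; the function name in_use_volumes is hypothetical and not part of any OpenStack client.

```shell
#!/bin/sh
# Print "<volume id> <instance id>" for every in-use volume found in a
# `nova volume-list` style table read from standard input.
# Assumption: columns are ID, Status, Display Name, Size, Volume Type,
# Attached to, separated by "|", as in the listing above.
in_use_volumes() {
    awk -F'|' '
        # Data and header rows start with "|"; the +---+ borders do not.
        /^\|/ {
            id = $2; status = $3; attached = $7
            gsub(/ /, "", id); gsub(/ /, "", status); gsub(/ /, "", attached)
            # The header row has status "Status", so it is skipped here too.
            if (status == "in-use") print id, attached
        }'
}

# Example (needs the admin credentials to be sourced first):
#   nova volume-list --all-tenants | in_use_volumes
```

Each instance ID printed this way can then be checked with nova show; a volume whose instance no longer exists is a likely candidate for the procedure below.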

- Try to detach it the 'normal' way

$ nova volume-detach 002dc79b-9050-459c-9ec3-f3a53b4bb1fb 24aa5fc3-0f08-4910-a354-6ed4d1fe8ea1

ERROR: The resource could not be found. (HTTP 404) (Request-ID: req-8ecd1359-f5ae-440f-96cf-f8f8217cdff6)

NOTE: the operation probably failed because the VM 002dc79b-9050-459c-9ec3-f3a53b4bb1fb had already been destroyed.

Find the volume on the iscsi storage using Volume-Display-Name

KILO release cinderDB

- Connect to the mysql db

mysql -u root -p

mysql> use cinder;

Database changed

mysql> SELECT * from volumes where display_name = 'orar-data';

mysql> SELECT id,size,status,attach_status,display_name from volumes where display_name = 'orar-data';

+--------------------------------------+------+-----------+---------------+--------------+

| id | size | status | attach_status | display_name |

+--------------------------------------+------+-----------+---------------+--------------+

| f4f7de64-7323-43ac-93f6-48d2314cfa6e | 3000 | in-use | attached | orar-data |

+--------------------------------------+------+-----------+---------------+--------------+

1 row in set (0.00 sec)

mysql> update volumes SET status="available", attach_status="detached" where id="f4f7de64-7323-43ac-93f6-48d2314cfa6e";

mysql> SELECT id,size,status,attach_status,display_name from volumes where display_name = 'orar-data';

+--------------------------------------+------+-----------+---------------+--------------+

| id | size | status | attach_status | display_name |

+--------------------------------------+------+-----------+---------------+--------------+

| f4f7de64-7323-43ac-93f6-48d2314cfa6e | 3000 | available | detached | orar-data |

+--------------------------------------+------+-----------+---------------+--------------+

1 row in set (0.00 sec)

mysql> quit;

Find the volume on the iscsi storage

- Connect to the mysql db

mysql -u root -p

mysql> use cinder;

Database changed

mysql> set @volid='24aa5fc3-0f08-4910-a354-6ed4d1fe8ea1';

mysql> select project_id,size,instance_uuid,mountpoint from volumes where id=@volid;

+----------------------------------+------+--------------------------------------+------------+

| project_id | size | instance_uuid | mountpoint |

+----------------------------------+------+--------------------------------------+------------+

| cc6353c145fd49adb7564e2ebe4f3f91 | 200 | 002dc79b-9050-459c-9ec3-f3a53b4bb1fb | /dev/vdb |

+----------------------------------+------+--------------------------------------+------------+

1 row in set (0.00 sec)

mysql> select display_name,display_description,provider_location from volumes where id=@volid;

+--------------+---------------------+------------------------------------------------------------------------------------------------------------------------------------+

| display_name | display_description | provider_location |

+--------------+---------------------+------------------------------------------------------------------------------------------------------------------------------------+

| Biocomp | biocomp's storage | 192.168.40.100:3260,1 iqn.2001-05.com.equallogic:0-fe83b6-a257200c3-d4200647e175661c-volume-24aa5fc3-0f08-4910-a354-6ed4d1fe8ea1 0 |

+--------------+---------------------+------------------------------------------------------------------------------------------------------------------------------------+

1 row in set (0.00 sec)

volume-24aa5fc3-0f08-4910-a354-6ed4d1fe8ea1 is the name of the volume on the iscsi storage.
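The backend name can also be pulled out of the provider_location value mechanically. This is a minimal sketch, assuming the name always has the form volume-<UUID> as in the row above; the function name iscsi_volume_name is hypothetical.

```shell
#!/bin/sh
# Print the backend volume name embedded in a Cinder provider_location value.
# Assumption: the name is "volume-" followed by a lowercase UUID.
# Prints nothing if no such name is found.
iscsi_volume_name() {
    printf '%s\n' "$1" |
        sed -n 's/.*\(volume-[0-9a-f]\{8\}-[0-9a-f]\{4\}-[0-9a-f]\{4\}-[0-9a-f]\{4\}-[0-9a-f]\{12\}\).*/\1/p'
}

# Example:
#   iscsi_volume_name "192.168.40.100:3260,1 iqn.2001-05.com.equallogic:0-fe83b6-a257200c3-d4200647e175661c-volume-24aa5fc3-0f08-4910-a354-6ed4d1fe8ea1 0"
```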

- Connect to the iscsi storage from cld-blu-01 or cld-blu-02

$ ssh grpadmin@172.16.36.13

grpadmin@172.16.36.13's password:

Last login: Mon Dec 14 09:55:50 2015 from 192.168.40.120 on tty??

Welcome to Group Manager

Copyright 2001-2014 Dell Inc.

CloudUnipdVeneto>

- Create a copy of the volume (just to be safe)

CloudUnipdVeneto> vol show

Name            Size       Snapshots Status  Permission Connections T

--------------- ---------- --------- ------- ---------- ----------- -

volume-6feb1a3b 1GB        0         online  read-write 0           Y

  -2257-4c3d-84

  f9-24a476ea64

  c6

volume-1fb65656 500GB      0         online  read-write 1           Y

  -f358-4ab8-8b

  aa-fb0c48a0e1

  8d

volume-86a39713 16GB       0         online  read-write 1           Y

  -50ba-4885-8f

  dc-9d7cdf3d16

  a8

volume-24aa5fc3 200GB      1         online  read-write 0           Y

  -0f08-4910-a3

  54-6ed4d1fe8e

  a1

... ...

CloudUnipdVeneto> vol select volume-24aa5fc3-0f08-4910-a354-6ed4d1fe8ea1

CloudUnipdVeneto(volume_volume-24aa5fc3-0f08-4910-a354-6ed4d1fe8ea1)> clone copy-of-24aa5fc3-0f08-4910-a354-6ed4d1fe8ea1

Volume creation succeeded.

iSCSI target name is iqn.2001-05.com.equallogic:0-fe83b6-a257200c3-d4200647e175661c-copy-of-24aa5fc3-0f08-4910-a354-6ed4d1fe8ea1

CloudUnipdVeneto(volume_volume-24aa5fc3-0f08-4910-a354-6ed4d1fe8ea1)> exit

CloudUnipdVeneto> logout

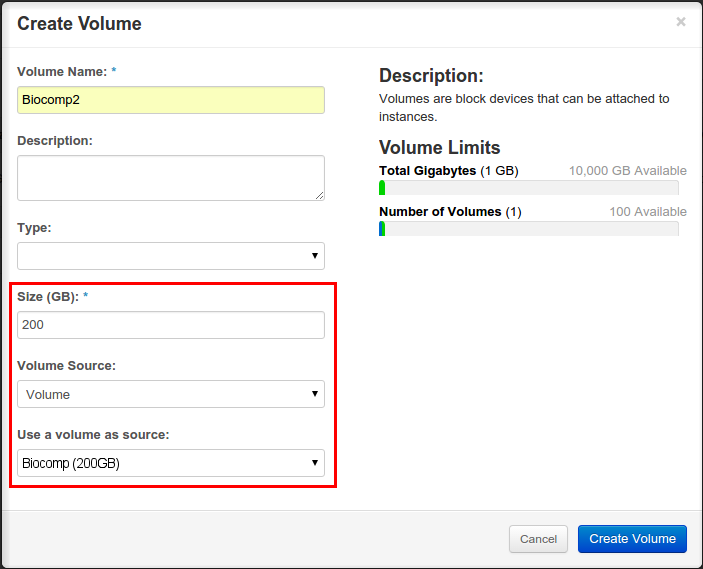

Make a copy of the volume from the dashboard

Try to use the copy the normal way

- Attach the volume to a working VM

- Check the contents are fine

- Detach the volume

Manually detach the stuck volume via DB

$ mysql -u root -p

mysql> set @volid='24aa5fc3-0f08-4910-a354-6ed4d1fe8ea1';

Query OK, 0 rows affected (0.01 sec)

mysql> set @instance='002dc79b-9050-459c-9ec3-f3a53b4bb1fb';

Query OK, 0 rows affected (0.01 sec)

mysql> use nova;

Database changed

mysql> delete from block_device_mapping where not deleted and volume_id=@volid and instance_uuid=@instance;

Query OK, 1 rows affected (0.01 sec)

mysql> use cinder;

Database changed

mysql> select instance_uuid,attach_time,status,attach_status,mountpoint from volumes where id=@volid;

+--------------------------------------+----------------------------+--------+---------------+------------+

| instance_uuid                        | attach_time                | status | attach_status | mountpoint |

+--------------------------------------+----------------------------+--------+---------------+------------+

| 002dc79b-9050-459c-9ec3-f3a53b4bb1fb | 2015-12-10T09:27:18.192892 | in-use | attached      | /dev/vdb   |

+--------------------------------------+----------------------------+--------+---------------+------------+

mysql> update volumes set instance_uuid=NULL,attach_time=NULL,status='available',attach_status='detached',mountpoint=NULL where id=@volid;

Query OK, 1 rows affected (0.01 sec)
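Because these statements modify the nova and cinder databases directly, it may help to generate them from the two IDs and review them before anything is run. The sketch below only prints the statements used in the session above; the function names is_uuid and detach_sql and the file name detach.sql are hypothetical.

```shell
#!/bin/sh
# Return success if $1 looks like a lowercase UUID.
is_uuid() {
    printf '%s\n' "$1" |
        grep -Eq '^[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}$'
}

# Print the manual-detach statements for volume $1 attached to instance $2.
# Nothing is executed here; the output is meant to be reviewed first.
detach_sql() {
    is_uuid "$1" && is_uuid "$2" || {
        echo "usage: detach_sql <volume-uuid> <instance-uuid>" >&2
        return 1
    }
    cat <<EOF
set @volid='$1';
set @instance='$2';
use nova;
delete from block_device_mapping where not deleted and volume_id=@volid and instance_uuid=@instance;
use cinder;
update volumes set instance_uuid=NULL,attach_time=NULL,status='available',attach_status='detached',mountpoint=NULL where id=@volid;
EOF
}

# Example:
#   detach_sql 24aa5fc3-0f08-4910-a354-6ed4d1fe8ea1 002dc79b-9050-459c-9ec3-f3a53b4bb1fb > detach.sql
#   mysql -u root -p < detach.sql    # only after reviewing detach.sql
```

Rejecting anything that is not a UUID keeps a mistyped or pasted argument from ending up inside the SQL text.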

Check the volume is again available

$ nova volume-list --all-tenants

+--------------------------------------+-----------+--------------+------+--------------+--------------------------------------+

| ID                                   | Status    | Display Name | Size | Volume Type  | Attached to                          |

+--------------------------------------+-----------+--------------+------+--------------+--------------------------------------+

| f480ca6a-0b52-499c-94ab-461be861c7fa | available | Biocomp2     | 200  | bulk_storage |                                      |

| 7d95cdc2-69fd-4b39-ae89-2abbfdf9bb7f | available | test         | 20   | bulk_storage |                                      |

| 24aa5fc3-0f08-4910-a354-6ed4d1fe8ea1 | available | Biocomp      | 200  | bulk_storage |                                      | <== THIS *WAS* THE BAD GUY

| 86a39713-50ba-4885-8fdc-9d7cdf3d16a8 | in-use    | swap_volume  | 16   | bulk_storage | 55778ac6-e39e-4760-bbcb-7a7551f7a482 |

| 1fb65656-f358-4ab8-8baa-fb0c48a0e18d | in-use    | data-1       | 500  | bulk_storage | 55778ac6-e39e-4760-bbcb-7a7551f7a482 |

| 6feb1a3b-2257-4c3d-84f9-24a476ea64c6 | available | test         | 1    | bulk_storage |                                      |

+--------------------------------------+-----------+--------------+------+--------------+--------------------------------------+

Delete the original volume / rename the copy

It should now be possible to delete the original volume from the dashboard. This is necessary because the volume has become somewhat toxic: if you try to use it again, it will get stuck again. Since you made a copy (actually two of them…), it can be safely deleted.

After that you can use the dashboard to rename the volume copy after the original one.

Transfer ownership of the volume!

Since you created the volume copy as the admin, the copy is owned by 'admin'.

You must transfer the ownership to the user who created the original volume. To do so:

- As the admin user:

- Modify the 'Volume quota' for the project if necessary: during the ownership transfer the volume is initially duplicated so extra space is allocated (and then freed). Quota can be safely restored after the procedure ends;

- Download the rc file of the project and the chain.pem file (gunzip it)

- Modify the rc file: put the chain.pem file in the same directory and add the following line inside the rc file:

export OS_CACERT=./chain.pem

- Source the rc file and list the project volumes:

$ . testing-openrc-admin.sh

Please enter your OpenStack Password:

$ cinder list

+--------------------------------------+-----------+--------------+------+-------------+----------+-------------+

| ID                                   | Status    | Display Name | Size | Volume Type | Bootable | Attached to |

+--------------------------------------+-----------+--------------+------+-------------+----------+-------------+

| a79bbf87-112b-471b-bd0c-6d938da57b4c | available | uno          | 5    | None        | false    |             |

+--------------------------------------+-----------+--------------+------+-------------+----------+-------------+

- Create a transfer request for the volume

$ cinder transfer-create a79bbf87-112b-471b-bd0c-6d938da57b4c

+------------+--------------------------------------+

| Property   | Value                                |

+------------+--------------------------------------+

| auth_key   | a182392984ce82b7                     |

| created_at | 2015-12-16T14:08:37.374753           |

| id         | d01cf5a1-5e3d-466a-9f4e-0efcca8e818c |

| name       | None                                 |

| volume_id  | a79bbf87-112b-471b-bd0c-6d938da57b4c |

+------------+--------------------------------------+

- Mail the id and auth_key to the new owner (d01cf5a1-5e3d-466a-9f4e-0efcca8e818c and a182392984ce82b7 in this case)
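To avoid copy-and-paste mistakes when mailing the two values, they can be extracted from the transfer-create output. This is a minimal sketch, assuming the two-column Property/Value table shown above; the function name table_value is hypothetical.

```shell
#!/bin/sh
# Print the value of property $1 from a "| Property | Value |" table
# (as printed by `cinder transfer-create`) read from standard input.
table_value() {
    awk -F'|' -v key="$1" '
        # Only rows starting with "|" carry data; borders start with "+".
        /^\|/ {
            name = $2; value = $3
            # Trim the padding spaces around both cells.
            gsub(/^ +| +$/, "", name); gsub(/^ +| +$/, "", value)
            if (name == key) print value
        }'
}

# Example (run as the admin, after sourcing the rc file):
#   out=$(cinder transfer-create a79bbf87-112b-471b-bd0c-6d938da57b4c)
#   printf '%s\n' "$out" | table_value id
#   printf '%s\n' "$out" | table_value auth_key
```

The output is captured once and parsed twice because the auth_key is shown only in the transfer-create output above.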

- As the new user

- Set a password for your user. The rc file doesn't work through the SSO mechanism;

- Download the rc file and the chain.pem file (gunzip it)

- Modify the rc file: put the chain.pem file in the same directory and add the following line inside the rc file:

export OS_CACERT=./chain.pem

- Source the rc file and accept the transfer:

$ . testing-openrc-admin.sh

Please enter your OpenStack Password:

$ cinder transfer-accept d01cf5a1-5e3d-466a-9f4e-0efcca8e818c a182392984ce82b7

+-----------+--------------------------------------+

| Property  | Value                                |

+-----------+--------------------------------------+

| id        | d01cf5a1-5e3d-466a-9f4e-0efcca8e818c |

| name      | None                                 |

| volume_id | a79bbf87-112b-471b-bd0c-6d938da57b4c |

+-----------+--------------------------------------+
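Both the admin and the new user have to add the OS_CACERT line to their rc file. A minimal sketch of doing that edit from the shell, so that running it twice does not add the line twice; the function name add_cacert is hypothetical.

```shell
#!/bin/sh
# Append the OS_CACERT export to rc file $1 unless the file already sets one.
add_cacert() {
    grep -q '^export OS_CACERT=' "$1" ||
        printf '%s\n' 'export OS_CACERT=./chain.pem' >> "$1"
}

# Example:
#   add_cacert testing-openrc-admin.sh
```

Since ./chain.pem is a relative path, the cinder commands need to be run from the directory that contains chain.pem.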